Introduction

ClickHouse has several backup solutions to restore the disk, database, table, and partition levels. You can access other detailed backup and restore solution documentation here and export/import documentation here. Today, we would like to talk about the “FREEZE” backup solution and its advantages and disadvantages.

What is the Freeze command in ClickHouse?

“Freeze” is an “ALTER TABLE” command to take local backup at the partition or table level. This query creates the backup of all related partitions. While the command was executed, the query created hard links for each partition’s data files with the same structure of directories. These hard links are located under “/var/lib/clickhouse/shadow/” folder. Because the “ALTER TABLE <DBNAME>.<TABLE_NAME> FREEZE PARTITION” command only backs up the data, if you would like to restore the table to the remote server, you need to copy both the data and metadata. The metadata SQL is located as “/var/lib/clickhouse/metadata/<database>/<table>.sql” for the table.

The sample freeze command is shown as below:

ALTER TABLE table_name [ON CLUSTER cluster] FREEZE [PARTITION partition_expr] [WITH NAME 'backup_name']

Here, if you don’t specify the “PARTITION” clause, it backs up the whole table.

How Does Freeze Make Use of Hard Links?

Every file on Linux has a single hard link at the beginning. The hard link is between the filename and the data stored on the filesystem. If you create an additional hard link, you create a new file that points to exactly the same data location as the initial file name. On the other hand, another type of Linux link is a soft link. In a soft link, linked files do not directly point to the data but to the file.

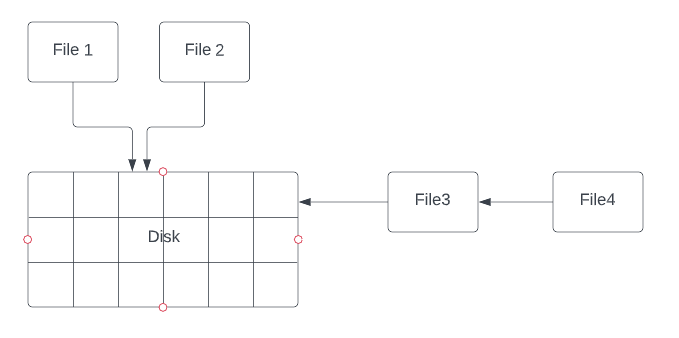

Fig. 1 Hard Link vs. Soft Link

As shown in Fig. 1, “File1” and “File2” point to the same data location, so a hard link is used. Moreover, “File4” points to “File3” (not the data itself) as a “soft link“.

ClickHouse uses hard links as the “freeze” backup method. The backed-up files point to the same data location as the original table or partition files at the beginning. If the original table or partition is modified, dropped or deleted, ClickHouse merges these changes at the file level in another disk location . It means backed-up data locations will not changed after hard links created by the “freeze” command.

Restore Data From Freeze

To restore partition or table from freeze backup, you need to follow the steps below:

- Create the table if it does not exist.

- Copy the data from source DB’s “/var/lib/clickhouse/shadow/…/” folder to the destination DB’s “/var/lib/clickhouse/data/<database>/<table>/detached/” folder as well.

- Execute “ALTER TABLE <DBNAME>.<TABLE_NAME> ATTACH PARTITION <PARTITON_NAME>” command to attach related partitions.

- Run “Unfreeze” command to delete backup file from source DB.

Scenarios for Freeze in ClickHouse

In this part, we’ll work on some backup scenarios with the examples. All of the following scenarios, ClickHouse 22.9.2 version is used.

Scenario 1

According to this scenario, we’ll use freeze on a single-node partitioned table.

For that reason, first we need to find a partitioned table. The following query shows us the partition id and count for a given table. You can choose any partitioned table for replaying scenarios.

SELECT

count(),

_partition_id

FROM RECMAN.queryLog

GROUP BY _partition_id

ORDER BY 2 ASC

┌─count()─┬─_partition_id─┐

│ 32 │ 20220928 │

│ 3 │ 20220929 │

│ 37 │ 20221006 │

│ 2 │ 20221007 │

│ 7 │ 20221008 │

│ 33 │ 20221009 │

│ 9 │ 20221010 │

│ 5 │ 20221011 │

│ 45 │ 20221012 │

│ 4 │ 20221013 │

│ 42 │ 20221014 │

│ 141 │ 20221016 │

│ 98 │ 20221017 │

└─────────┴───────────────┘

Let’s assume we choose “20221016” partition to backup with “freeze“. Here is the command for these operation.

ALTER TABLE <DBNAME>.<TABLENAME>

FREEZE PARTITION 20221016 WITH NAME 'recman_querylog_20221016_bck'

In our case, the command backs up the “RECMAN.queryLog” table under the following folder, but it’ll slightly change while you try.

“/var/lib/clickhouse/shadow/recman_querylog_20221016_bck/store/a30/a30d6951-0b46-456e-b4af-9fcfc099c935/20221016_12_12_0_14“

Then, it is time to drop “20221016” partition.

ALTER TABLE <DBNAME>.<TABLENAME>

DROP PARTITION '20221016';

There is no “20221016” partition in the “RECMAN.queryLog” table right now. Let’s try to restore that partition. For that reason, first of all, we need to copy backed-up partition data to the “detached” folder. We used “rsync” for that operation, but you can use “scp“, “tar” or any other Linux utility to do that.

root@clickhouse01:/var/lib/clickhouse/shadow/recman_querylog_20221016_bck/store/a30/a30d6951-0b46-456e-b4af-9fcfc099c935# rsync -a 20221016_12_12_0_14 /var/lib/clickhouse/data/RECMAN/queryLog/detached/ root@clickhouse01:/var/lib/clickhouse/shadow/recman_querylog_20221016_bck/store/a30/a30d6951-0b46-456e-b4af-9fcfc099c935# ls -al /var/lib/clickhouse/data/RECMAN/queryLog/detached/ total 12 drwxr-x--- 3 clickhouse clickhouse 4096 Nov 9 09:57 . drwxr-x--- 15 clickhouse clickhouse 4096 Nov 8 10:05 .. drwxr-x--- 2 clickhouse clickhouse 4096 Nov 9 09:48 20221016_12_12_0_14

In this example, we are trying to restore a partition to the same table on the same server, so there is no need to create a table. On the other hand, if you need to restore data to the remote server, you must create a table and migrate backed-up data with a folder to the destination table’s “detached” folder.

Now it is time to attach dropped partition.

ALTER TABLE <DBNAME>.<TABLENAME>

ATTACH PARTITION 20221016

The final step is to check the partition to see if it was truly restored.

SELECT count() FROM <DBNAME>.<TABLENAME> WHERE _partition_id = '20221016' Query id: 4bf6bb45-de53-4d16-b63e-0801972a4cce ┌─count()─┐ │ 141 │ └─────────┘

Scenario 2

In this scenario, we’ll try to back up the non-partitioned table and restore it to a different table on the single node.

First of all, we need to find a non-partitioned table. In the scope of this scenario, we’ll use “opensky” table, but you can also use any non-partitioned table. In ClickHouse, even if any “MergeTree” table is created without a “partition” clause, data may be distributed over different folders. We call these “parts”. In the following query, the “sampledataset.opensky” table has 4 parts. It may be different in your tests.

SELECT

partition,

name,

database,

table

FROM system.parts

WHERE (database = 'sampleDatasets') AND (table = 'opensky')

Query id: 26f3d034-cde7-46fc-8f2e-237550eafda3

┌─partition─┬─name──────┬─database───────┬─table───┐

│ tuple() │ all_1_1_1 │ sampleDatasets │ opensky │

│ tuple() │ all_2_2_1 │ sampleDatasets │ opensky │

│ tuple() │ all_3_3_0 │ sampleDatasets │ opensky │

│ tuple() │ all_4_4_0 │ sampleDatasets │ opensky │

└───────────┴───────────┴────────────────┴─────────┘

It is time to backup table with the “freeze” command. We did not use the “partition” clause, so it backed-up whole table data as well.

ALTER TABLE sampleDatasets.opensky

FREEZE WITH NAME 'sampledataset_opensky_bck'

As you can see, all parts were backed up under “/var/lib/clickhouse/shadow”. The whole path should be different on your own tests.

root@clickhouse01:/var/lib/clickhouse/shadow/sampledataset_opensky_bck/store/1af/1afc664b-0a25-443a-a317-532532434753# ls -al total 24 drwxr-x--- 6 clickhouse clickhouse 4096 Nov 9 11:04 . drwxr-x--- 3 clickhouse clickhouse 4096 Nov 9 11:04 .. drwxr-x--- 2 clickhouse clickhouse 4096 Nov 9 11:04 all_1_1_1 drwxr-x--- 2 clickhouse clickhouse 4096 Nov 9 11:04 all_2_2_1 drwxr-x--- 2 clickhouse clickhouse 4096 Nov 9 11:04 all_3_3_0 drwxr-x--- 2 clickhouse clickhouse 4096 Nov 9 11:04 all_4_4_0

Then, we need to create a new table with the same metadata as the source table. We can change the table name. Also, we can create tables on a local or remote server. You can find create table script in both “show create table” command or SQL statement under “/var/lib/clickhouse/metadata/<database>/<table>.sql“.

>SHOW CREATE TABLE sampleDatasets.opensky

│ CREATE TABLE sampleDatasets.opensky

(

`callsign` String,

`number` String,

`icao24` String,

`registration` String,

`typecode` String,

`origin` String,

`destination` String,

`firstseen` DateTime,

`lastseen` DateTime,

`day` DateTime,

`latitude_1` Float64,

`longitude_1` Float64,

`altitude_1` Float64,

`latitude_2` Float64,

`longitude_2` Float64,

`altitude_2` Float64

)

ENGINE = MergeTree

ORDER BY (origin, destination, callsign)

SETTINGS index_granularity = 8192 │

Let’s create a table with a new name with this script’s help.

CREATE TABLE sampleDatasets.opensky_freeze_restore

(

`callsign` String,

`number` String,

`icao24` String,

`registration` String,

`typecode` String,

`origin` String,

`destination` String,

`firstseen` DateTime,

`lastseen` DateTime,

`day` DateTime,

`latitude_1` Float64,

`longitude_1` Float64,

`altitude_1` Float64,

`latitude_2` Float64,

`longitude_2` Float64,

`altitude_2` Float64

)

ENGINE = MergeTree

ORDER BY (origin, destination, callsign)

SETTINGS index_granularity = 8192

After that, it is time to copy all parts to the destination table’s detached folder.

root@clickhouse01:/var/lib/clickhouse/shadow/sampledataset_opensky_bck/store/.../...# ls -l total 16 drwxr-x--- 2 clickhouse clickhouse 4096 Nov 9 11:04 all_1_1_1 drwxr-x--- 2 clickhouse clickhouse 4096 Nov 9 11:04 all_2_2_1 drwxr-x--- 2 clickhouse clickhouse 4096 Nov 9 11:04 all_3_3_0 drwxr-x--- 2 clickhouse clickhouse 4096 Nov 9 11:04 all_4_4_0 > rsync -a * /var/lib/clickhouse/data/sampleDatasets/opensky_freeze_restore/detached/

Finally, attach parts to the destination table one by one.

alter table sampleDatasets.opensky_freeze_restore attach part 'all_1_1_1'; alter table sampleDatasets.opensky_freeze_restore attach part 'all_2_2_1'; alter table sampleDatasets.opensky_freeze_restore attach part 'all_3_3_0'; alter table sampleDatasets.opensky_freeze_restore attach part 'all_4_4_0';

We have also tested the “freeze” command in sharded tables, partitioned and non-partitioned. You can access these tests from here.

They both work fine, too.

Conclusion

The “freeze” command should be used to back up tables and partitions both in a single node and sharded clusters. Because of the use of hard links, the backup time is shorter than the other methods. My findings about this method are :

- It shortens the backup time.

- This method does not copy the metadata information, so if the table and/or database do not exist on the destination server, we need to create them.

- Freeze makes copies of related tables and data files, and folders under “/var/lib/clickhouse/shadow” folder. The administrator is responsible for moving whole backed-up folders to the destination table’s detached directory.

- In the destination database, it is impossible to attach multiple partitions or parts simultaneously. The administrator needs to run “attach” commands for all partitions or parts one by one.

According to the findings, this method should be useful for one-time table migration, but it is very hard to manage daily backup operations with “freeze.”

To learn more about Backup & Restore techniques in ClickHouse, read the following articles: