Introduction

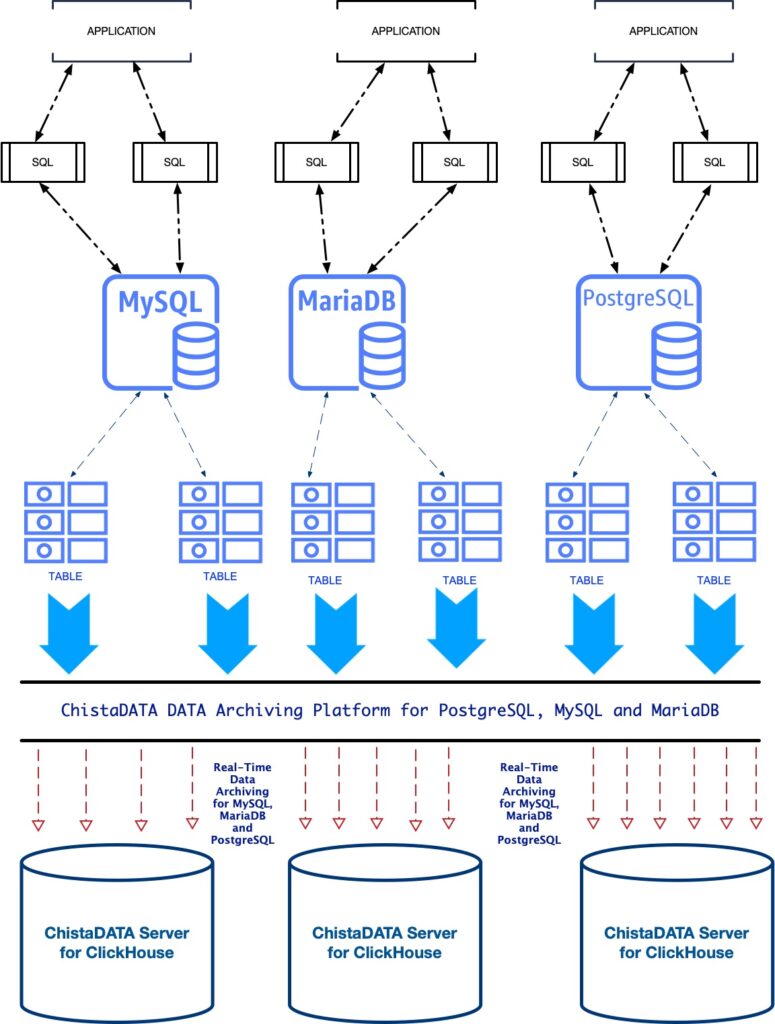

ClickHouse is a versatile database management system known for its real-time analytics capabilities. However, it offers more than just real-time analytics. ClickHouse can also serve as an archive store for transaction computing systems or relational database management systems (RDBMS). This is primarily due to its exceptional performance, scalability, and reliability.

ClickHouse’s design and architecture make it well-suited for efficiently handling vast data. It employs columnar storage, which enables efficient compression and quick data retrieval. This makes it ideal for storing and querying large volumes of historical data.

In addition, ClickHouse’s scalability is impressive. It can handle massive workloads and scale horizontally by distributing data across multiple servers. This allows organizations to easily expand their data storage and processing capabilities as their needs grow.

Moreover, ClickHouse is renowned for its reliability. It offers features like replication, sharding, and fault-tolerant design, ensuring data durability and high availability. This makes it suitable for critical transaction computing systems requiring performance and data integrity.

By leveraging ClickHouse as an archive store for transaction computing systems or RDBMS, organizations can benefit from its exceptional performance in handling analytical queries while retaining historical data for compliance or reference purposes. It provides a cost-effective and efficient solution for managing large volumes of data over time.

Process of Archiving Data to ClickHouse

- Data Selection: Identify the data in PostgreSQL, MySQL, or MariaDB that needs to be archived. This typically includes historical or less frequently accessed data that is no longer actively used in transactional operations.

- Data Extraction: Extract the selected data from the source database. This can be done using various methods, such as SQL queries or ETL processes, depending on the database technology and the specific data extraction requirements.

- Data Transformation and Formatting: Convert the extracted data into a format suitable for ClickHouse. This may involve transforming the data schema, adjusting data types, and ensuring compatibility with ClickHouse’s columnar storage format.

- Data Loading into ClickHouse: Utilize ClickHouse’s native data ingestion mechanisms, such as the ClickHouse SQL interface, ClickHouse client libraries, or external data integration tools, to load the archived data into ClickHouse tables. ClickHouse’s high-speed data loading capabilities ensure efficient and fast data ingestion.

- Indexing and Query Optimization: Create appropriate indexes on the archived data in ClickHouse to optimize query performance. Analyze the query patterns and design indexes that align with the specific analytical requirements of the archived data.

- Data Retention and Archiving Strategy: Define a data retention policy and archiving strategy based on the organization’s specific needs. This includes determining the duration of data retention in ClickHouse and establishing periodic archiving processes to ensure efficient archived data management.

- Data Access and Analytics: Leverage ClickHouse’s powerful SQL capabilities, analytical functions, and data manipulation tools to perform advanced analytics on archived data. ClickHouse’s real-time query processing capabilities enable organizations to gain valuable insights from historical data for decision-making and business intelligence purposes.

Unleashing Data-driven Insights in Banking: ClickHouse for Real-Time Analytics and Efficient Archive Storage

In the banking and financial services sector, ClickHouse can be utilized to streamline data management processes and improve decision-making capabilities. One practical use case involves utilizing ClickHouse for real-time analytics and archive storage.

Real-Time Analytics: By implementing ClickHouse, banks can analyze and gain valuable real-time insights from their transactional data. They can monitor customer transactions, detect fraudulent activities, identify patterns for risk assessment, and generate customized reports and dashboards for timely decision-making. ClickHouse’s performance and scalability enable fast querying and analysis of large volumes of data, empowering banks to make informed and proactive business decisions.

Archive Storage: Banks must store transactional data for compliance and historical reference. ClickHouse’s columnar storage and compression capabilities make it ideal for efficiently storing and managing vast amounts of historical data. Banks can use ClickHouse as an archive store to ensure data integrity, scalability, and reliability while optimizing storage costs. They can retrieve archived data when needed, perform historical trend analysis, and meet regulatory requirements effortlessly.

Overall, integrating ClickHouse into banking and financial services businesses allows them to harness the power of real-time analytics for operational insights and leverage reliable archive storage for compliance and historical purposes. This enables banks to enhance their data management capabilities, improve decision-making processes, and ensure regulatory compliance cost-effectively.

Conclusion

In summary, ClickHouse’s utilization extends beyond real-time analytics. Its performance, scalability, and reliability make it a powerful choice as an archive store for transaction computing systems or RDBMS, enabling organizations to effectively achieve analytics and long-term data storage goals.

To know more about Clickhouse as an Archival Store, do read the following article: