Introduction

A Bloom filter is a data structure that tells the user whether a particular item is part of a set. Although it cannot definitively tell if an item is in the set, it can definitely report if that item is not in the set.

The Bloom Filter is a probability-based data structure invented by Burton Howard Bloom in 1970. With this data structure, it is possible to query whether an element is in a cluster or not at a low cost. Created by Burton Howard Bloom in 1970, the Bloom filter is used in most applications due to its efficiency in storage space usage. For some cryptocurrencies (especially Bitcoin), Simplified Payment Verification, or SPV (Simplified Payment Verification) applications, Bloom filters are a must.

For example; Let’s assume that in a stream, data in the form of John, Sofia, Paul, Fernando, etc come in order. And let’s look at whether the name Simon appears in this dataset.

Approach 1: Using a Dictionary

In this approach, using a dictionary(HashMap, etc.), each different value is first checked to see if it exists in the dictionary. If the incoming value has passed before, it is in the dictionary. Otherwise, the queried value has never been passed before in the streaming dataset. Since Dictionary-like data structures also use hash algorithms in the background, the problem can be solved with a single hash operation. In other words, in terms of computational complexity, it can be thought of as a simple problem that can be solved in O(1) complexity.

However, what would be the scenario that we will encounter if the problem of whether a searched data is passed in the flowing data is tried to be implemented in an application where several million events are processed per second and running under heavy load? Probably our memory consumption will increase considerably and maybe our program will be sent off to infinity by the operating system due to lack of memory.

Approach 2: Using a Bloom Filter

Bloom filter is a bit array that records the results of more than one hash value in bits in terms of working dynamics. Using Bloom filters, we can find out if a value is passed in very large datasets. Basically, bloom filters can be described as a probabilistic data structure. As it is a probabilistic structure, there are cases where it does not work deterministically. Namely;

If the bloom filter returns a value passing, the value may or may not pass.

However, if the bloom filter says that a value is not passed, that value is definitely not in the dataset.

Bloom Filter Working Mechanism

After defining the problem and possible solution methods, let’s come to the working logic of the bloom filter. In order to understand the Bloom filter in depth, it is necessary to master the concept of hashing first. To define it briefly, the hash operation can be defined as the equivalent (summary) of data in an arbitrary size array.

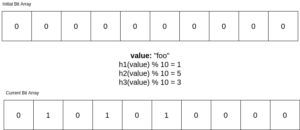

STEP 1: Create the Filter

As the first step in creating a Bloom filter, an array containing N elements and whose elements are initially 0 is defined.

STEP 2: Create Hash Functions

M separate hash functions are created.

STEP 3: Create Filter with Big Dataset

Each element in the streaming data is passed through M hashes. The mode of each of the hash values is taken according to N and the element in the resulting index value is set as 1.

STEP4: Query Desired Value

The data to be queried is passed through M hash algorithms and the indices are found by taking the mode according to N. If even one of the values in the found indices is 0, it means that the queried value is definitely not in the dataset.

Test dataset design via ClickHouse

Create a table with a single column containing 16-character long random strings:

create table test engine = MergeTree ORDER BY tuple() as select substring(replaceRegexpAll(randomPrintableASCII(100), '[^a-zA-Z0-9]', ''), 1, 16) as s from numbers_mt(20500000) where length(s) = 16 LIMIT 20000000;

Check its properties:

SELECT

min(length(s)),

max(length(s)),

count(),

uniqExact(s)

FROM test

┌─min(length(s))─┬─max(length(s))─┬──count()─┬─uniqExact(s)─┐ │ 16 │ 16 │ 20000000 │ 20000000 │ └────────────────┴────────────────┴──────────┴──────────────┘

Our table has exactly 20 million unique random strings, each exactly 16 characters long and only alphanumerical characters.

Because each value is unique, within each granule of 8192 rows, there will be exactly 8192 unique elements in each granule.

We will be using tokenbf_v1 index, because it allows us to tune all parameters of bloom filters. It actually tokenizes the string, but since our strings contain only alphanumeric characters, every row / string will have exactly 1 token.

Impact of number of hashes

Create tables with the same bloom filter size and a different number of hash functions.

CREATE TABLE bf_no_index ( `s` String ) ENGINE = MergeTree ORDER BY tuple(); CREATE TABLE bf_8192_7 ( `s` String, index bf s TYPE tokenbf_v1(8192, 7, 0) GRANULARITY 1 ) ENGINE = MergeTree ORDER BY tuple(); CREATE TABLE bf_8192_8 ( `s` String, index bf s TYPE tokenbf_v1(8192, 8, 0) GRANULARITY 1 ) ENGINE = MergeTree ORDER BY tuple(); CREATE TABLE bf_8192_1 ( `s` String, index bf s TYPE tokenbf_v1(8192, 1, 0) GRANULARITY 1 ) ENGINE = MergeTree ORDER BY tuple(); CREATE TABLE bf_8192_2 ( `s` String, index bf s TYPE tokenbf_v1(8192, 2, 0) GRANULARITY 1 ) ENGINE = MergeTree ORDER BY tuple(); CREATE TABLE bf_8192_3 ( `s` String, index bf s TYPE tokenbf_v1(8192, 3, 0) GRANULARITY 1 ) ENGINE = MergeTree ORDER BY tuple(); CREATE TABLE bf_8192_4 ( `s` String, index bf s TYPE tokenbf_v1(8192, 4, 0) GRANULARITY 1 ) ENGINE = MergeTree ORDER BY tuple(); CREATE TABLE bf_8192_5 ( `s` String, index bf s TYPE tokenbf_v1(8192, 5, 0) GRANULARITY 1 ) ENGINE = MergeTree ORDER BY tuple(); CREATE TABLE bf_8192_6 ( `s` String, index bf s TYPE tokenbf_v1(8192, 6, 0) GRANULARITY 1 ) ENGINE = MergeTree ORDER BY tuple();

Check how these indices are displayed in the system.data_skipping_indices table:

| table | data_compressed_bytes | data_uncompressed_bytes |

|---|---|---|

| bf_8192_1 | 17474135 | 20013056 |

| bf_8192_2 | 20082538 | 20013056 |

| bf_8192_3 | 20097632 | 20013056 |

| bf_8192_4 | 20098229 | 20013056 |

| bf_8192_5 | 20098727 | 20013056 |

| bf_8192_6 | 20099313 | 20013056 |

| bf_8192_7 | 20099477 | 20013056 |

| bf_8192_8 | 20099494 | 20013056 |

You can see that the compression ratio for them is low. This is expected since bloom filter vectors have random properties if they have optimal size.

Check what the insert speed looks like in these tables:

INSERT INTO bf_no_index SELECT * FROM source; -- Elapsed: 1.998 sec. INSERT INTO bf_8192_1 SELECT * FROM source; -- Elapsed: 2.888 sec. INSERT INTO bf_8192_2 SELECT * FROM source; -- Elapsed: 3.051 sec. INSERT INTO bf_8192_3 SELECT * FROM source; -- Elapsed: 3.234 sec. INSERT INTO bf_8192_4 SELECT * FROM source; -- Elapsed: 3.413 sec. INSERT INTO bf_8192_5 SELECT * FROM source; -- Elapsed: 3.567 sec. INSERT INTO bf_8192_6 SELECT * FROM source; -- Elapsed: 3.800 sec. INSERT INTO bf_8192_7 SELECT * FROM source; -- Elapsed: 4.035 sec. INSERT INTO bf_8192_8 SELECT * FROM source; -- Elapsed: 4.221 sec.

As you can see, the insertion speed predictably becomes lower with the addition of a bloom filter and with an increase in the number of hash functions. It is noticeable that the addition of the index itself slows down the storage of the column by almost 45%, and each additional hash function adds another 8% slowdown

Now let’s look at the read speed:

Reading speed was tested with the following script:

echo "select count() from bf_tests.bf_no_index where s = 'YMBTGV0R9N3RInZFOYcNkky4UOeiY2dH'" | clickhouse-benchmark -i 100 -c 1

The number of skipped granules was taken from the query execution log.

| table | granulas skipped (from 2443) | best query time (sec) | row read (thousands) | MB read | QPS |

|---|---|---|---|---|---|

| bf_no_index | 0 | 0.110 | 20000 | 820.00 | 8.001 |

| bf_8192_1 | 2158 | 0.031 | 2330 | 95.72 | 23.873 |

| bf_8192_2 | 2319 | 0.016 | 1020 | 41.65 | 54.591 |

| bf_8192_3 | 2370 | 0.012 | 598.02 | 24.52 | 73.524 |

| bf_8192_4 | 2373 | 0.010 | 573.44 | 23.51 | 80.132 |

| bf_8192_5 | 2380 | 0.011 | 516.10 | 21.16 | 80.615 |

| bf_8192_6 | 2383 | 0.011 | 491.52 | 20.15 | 83.641 |

| bf_8192_7 | 2377 | 0.010 | 540.67 | 22.17 | 86.980 |

| bf_8192_8 | 2371 | 0.011 | 589.82 | 24.18 | 74.192 |

As you can see, the reading speed directly depends on the number of “skipped” granules and the number of skipped granules behaves in accordance with the theoretical predictions obtained from the formulas.

Conclusion

After giving information about Bloom filters, we made an example by defining a dataset on ClickHouse and creating an index on this set. Insert and read speeds have dropped considerably with the addition of bloom filters.

For more information about the bloom filters please visit here and the original documentation.

To read more about using ClickHouse Indexes for Performance, do consider reading the below articles: