When Not to Use ClickHouse: Understanding the Limits of a Powerful Analytics Engine

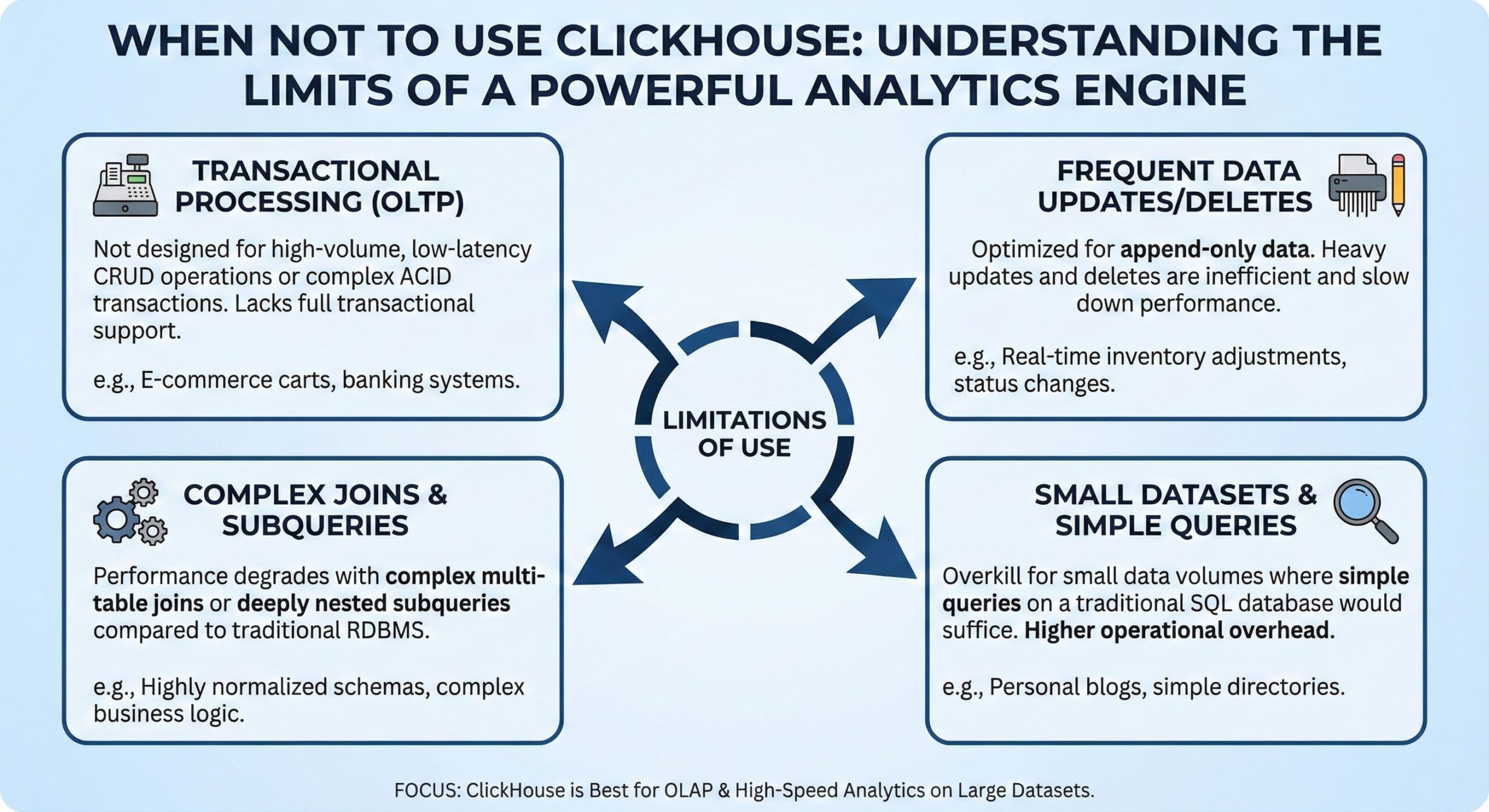

ClickHouse has emerged as a powerhouse in the world of analytics databases, renowned for its blistering speed in processing large-scale analytical queries. Developed by Yandex for its web analytics product, ClickHouse excels at crunching billions of rows to deliver insights in milliseconds. Its columnar storage, vectorized query execution, and efficient compression make it a top choice for real-time analytics, time-series analysis, and business intelligence workloads. However, despite its impressive capabilities, ClickHouse is not a universal solution. Like any specialized tool, it has specific design trade-offs that make it unsuitable for certain use cases. Understanding when not to use ClickHouse is just as important as knowing when to use it. Deploying ClickHouse in inappropriate scenarios can lead to performance bottlenecks, operational complexity, and increased costs. This article outlines the key situations where alternative database solutions should be strongly considered.

1. Transactional Workloads (OLTP)

ClickHouse is fundamentally designed for Online Analytical Processing (OLAP), not Online Transaction Processing (OLTP). This distinction is critical. OLTP systems handle a high volume of short, atomic transactions—think user logins, e-commerce purchases, or bank transfers—where data consistency, low-latency writes, and point lookups are paramount. ClickHouse, by contrast, is optimized for high-throughput, read-heavy analytical queries over massive datasets.

The architecture of ClickHouse favors bulk inserts over individual row operations. While it can handle inserts, it is most efficient when data is written in large batches (thousands or millions of rows at a time). Frequent single-row inserts or updates generate significant overhead due to the way ClickHouse manages its data parts and merges them in the background. This can lead to fragmentation and degraded performance over time.

Moreover, ClickHouse does not support traditional ACID transactions in the way that OLTP databases like PostgreSQL, MySQL, or Oracle do. It lacks row-level locking and does not provide immediate consistency across concurrent writes. While it offers eventual consistency through its asynchronous merge process, this is insufficient for applications requiring strict transactional integrity. For example, in a banking application, ensuring that a withdrawal and corresponding balance update occur atomically is non-negotiable—ClickHouse cannot guarantee this.

If your application revolves around user interactions, inventory management, or any system requiring real-time data modifications with strong consistency, a traditional relational database or a modern NewSQL database like Google Cloud Spanner or CockroachDB is a far better fit.

2. Point Queries and Row-Based Lookups

Another area where ClickHouse underperforms is in point queries—retrieving a single row or a small number of rows based on a specific key. For instance, fetching a user profile by user ID or looking up a specific transaction record. ClickHouse’s columnar storage, while excellent for scanning and aggregating data across millions of rows, is inefficient for retrieving all columns of a single row.

In a columnar database, each column is stored separately. To retrieve a complete row, the system must read from multiple column files, which involves more disk seeks and I/O operations compared to a row-based store where all data for a row is stored contiguously. Although ClickHouse supports primary indexes, they are sparse and designed to skip large blocks of data during scans, not to enable O(1) lookups. This means that even with an index, ClickHouse may need to scan thousands of rows to find a match.

For applications that rely heavily on key-value lookups or require sub-millisecond response times for individual records, a key-value store like Redis, a document database like MongoDB, or a wide-column store like Apache Cassandra is more appropriate. These systems are engineered for low-latency, high-concurrency access to individual data items. Using ClickHouse for such tasks would result in unnecessarily high latency and resource consumption.

3. High-Frequency Updates and Deletes

ClickHouse’s design assumes that data is largely immutable once written. While it does support UPDATE and DELETE operations, they are implemented as background merges rather than in-place modifications. When you issue a DELETE statement, ClickHouse does not immediately remove the data; instead, it marks the rows for deletion and processes the operation during the next merge of data parts. This makes deletes slow and resource-intensive, especially on large tables.

Similarly, updates are handled by creating new versions of the affected data parts, which are then merged later. This approach, known as a merge tree, is efficient for append-heavy workloads but becomes problematic when updates are frequent. The constant creation of new data parts can lead to excessive disk I/O, increased storage usage, and longer query times due to the need to scan more parts.

For use cases involving rapidly changing data—such as real-time bidding systems, stock trading platforms, or collaborative editing applications—ClickHouse is not suitable. These scenarios require immediate and efficient updates to existing records. A database with in-place update capabilities, such as PostgreSQL with its MVCC (Multi-Version Concurrency Control) or a specialized real-time database, would be a better choice. Attempting to use ClickHouse in such environments would result in poor performance and operational headaches.

4. Schema Flexibility and Rapid Iteration

ClickHouse requires a predefined schema, and while it supports some level of schema evolution (such as adding columns), it is not as flexible as schema-less or schema-on-read databases. Altering a schema in ClickHouse, especially on large tables, can be a slow and disruptive operation. For example, changing the data type of a column or removing a column involves rewriting the entire table, which can take hours or even days for petabyte-scale datasets.

This lack of agility makes ClickHouse less ideal for environments where the data model evolves rapidly, such as in early-stage startups, prototyping, or exploratory data analysis. In contrast, NoSQL databases like MongoDB or cloud data lakes using Parquet/ORC files with schema-on-read capabilities allow for greater flexibility. You can ingest semi-structured data (like JSON) without defining a rigid schema upfront and adapt the structure as your understanding of the data evolves.

Additionally, ClickHouse’s strong typing and strict schema enforcement can be a barrier when dealing with heterogeneous or inconsistent data sources. If your application needs to handle varying data formats or frequent schema changes, the operational overhead of managing ClickHouse schemas may outweigh its performance benefits.

5. General-Purpose Database Needs

ClickHouse should not be viewed as a replacement for a general-purpose relational database. It lacks many features that are standard in traditional RDBMS systems, such as foreign key constraints, complex joins with high efficiency, stored procedures, and triggers. While ClickHouse can perform joins, it is optimized for star or snowflake schemas with one large fact table and several small dimension tables. Performing complex joins on large tables can be slow and memory-intensive.

Furthermore, ClickHouse does not support standard SQL features like transactions, rollbacks, or updatable views. It also has limited support for window functions compared to databases like PostgreSQL or Snowflake. If your application requires a rich set of SQL functionality, complex data integrity rules, or a wide variety of query patterns, a full-featured RDBMS is a better foundation.

Using ClickHouse as a primary database for a web application—handling both user data and analytics—would be a mistake. A more effective architecture is to use a transactional database for the operational workload and replicate or batch-load data into ClickHouse for analytical queries. This separation of concerns allows each system to operate in its area of strength.

6. Small Datasets and Low Query Volumes

ClickHouse’s performance advantages are most apparent when dealing with large datasets—typically millions or billions of rows. For small datasets (e.g., thousands or tens of thousands of rows), the overhead of setting up and maintaining a ClickHouse cluster may not be justified. Traditional databases can easily handle such workloads with excellent performance and much simpler operations.

The complexity of ClickHouse—its distributed architecture, configuration options, and operational requirements—introduces unnecessary friction for small-scale applications. The time and resources spent on deployment, monitoring, and tuning would be better invested elsewhere. Moreover, the query latency for small datasets on a traditional database is often comparable to or even better than ClickHouse, especially for point queries.

If your data size is modest and your analytical needs are light, consider lightweight solutions like SQLite for embedded analytics, or even in-memory processing with Pandas in Python. For web applications, leveraging the existing application database with proper indexing may suffice. ClickHouse’s value proposition diminishes significantly when the data volume doesn’t stress the capabilities of simpler systems.

7. Real-Time Stream Processing with Stateful Operations

While ClickHouse can ingest data from streaming sources like Kafka or RabbitMQ, it is not a stream processing engine. It does not provide native support for stateful operations such as windowed aggregations, sessionization, or complex event processing. These tasks require maintaining state over time, which is outside ClickHouse’s scope.

For real-time analytics that require processing data streams with low latency and stateful logic, dedicated stream processing frameworks like Apache Flink, Apache Spark Streaming, or Kafka Streams are more appropriate. These systems can perform complex transformations, maintain state across events, and emit results to various sinks—including ClickHouse. A common pattern is to use Flink for real-time stream processing and write the aggregated results into ClickHouse for fast querying and visualization.

Attempting to implement stream processing logic directly in ClickHouse—such as simulating sliding windows with frequent queries—would be inefficient and impractical. It would place undue load on the database and likely fail to meet real-time requirements. ClickHouse should be seen as a sink for processed data, not the processor itself.

8. High Availability and Disaster Recovery Complexity

Although ClickHouse supports replication and sharding for high availability, setting up and managing a resilient ClickHouse cluster requires significant expertise. It relies on external systems like ZooKeeper (or ClickHouse Keeper) for coordination, which adds operational complexity. Misconfigurations can lead to data loss or cluster instability.

Compared to managed database services like Amazon RDS, Google Cloud SQL, or even managed analytics platforms like Snowflake or BigQuery, ClickHouse demands more hands-on administration. Tasks such as backup, restore, failover, and scaling require careful planning and scripting. While there are managed ClickHouse offerings (e.g., ClickHouse Cloud, Altinity Cloud), they may not provide the same level of automation and ease of use as other cloud-native databases.

For organizations without dedicated database administrators or those prioritizing operational simplicity, the overhead of managing ClickHouse may be prohibitive. In such cases, opting for a fully managed analytics solution that handles infrastructure, scaling, and availability transparently is often a better choice, even if it comes at a higher cost.

9. Data Warehousing with Complex ETL Pipelines

ClickHouse can serve as a data warehouse, but it lacks built-in ETL (Extract, Transform, Load) capabilities. Unlike platforms like Snowflake or Amazon Redshift, which offer integrated tools for data transformation, scheduling, and orchestration, ClickHouse is purely a storage and query engine. You must implement ETL pipelines externally using tools like Apache Airflow, dbt, or custom scripts.

This separation is not inherently bad—it promotes flexibility and allows you to choose the best tools for each stage of the pipeline. However, it does mean that building a complete data platform with ClickHouse requires more integration work. You need to manage data ingestion, transformation logic, error handling, and monitoring across multiple systems.

For teams seeking an all-in-one solution with minimal integration effort, a managed data warehouse with native ETL support may be more efficient. ClickHouse is best suited for organizations that already have strong data engineering practices and prefer to build custom pipelines with maximum control over performance and cost.

Conclusion

ClickHouse is an exceptional tool for specific analytical workloads, particularly those involving large volumes of immutable data and complex aggregations. Its speed and efficiency have made it a favorite among data engineers and analysts. However, it is not a one-size-fits-all database. Its strengths in OLAP come at the cost of limitations in transactional processing, point queries, frequent updates, and operational simplicity.

Before adopting ClickHouse, carefully evaluate your use case against its design principles. If your application requires real-time transactions, frequent data modifications, flexible schemas, or low-latency key-value lookups, consider alternative databases that align better with those needs. A hybrid architecture—using ClickHouse alongside other databases—often provides the best of both worlds, leveraging ClickHouse for analytics while relying on specialized systems for operational workloads.

In summary, ClickHouse shines when used for what it was designed for: fast, scalable analytics on large datasets. Recognizing its limitations ensures that you make informed decisions and build robust, efficient data systems that meet your business requirements without unnecessary complexity.

Further Reading

MergeTree Settings: Tuning for Insert Performance vs Query Speed

Monitoring Merge Queues in ClickHouse

Setting up Alerts in ClickHouse for Performance Troubleshooting