Master ClickHouse Custom Partitioning Keys: Optimize Performance and Reduce Costs

Unlock the full potential of your ClickHouse database with strategic custom partitioning – A comprehensive guide from ChistaDATA’s experts

When it comes to maximizing ClickHouse performance, custom partitioning keys are one of the most powerful yet underutilized optimization techniques. At ChistaDATA, we’ve helped hundreds of organizations achieve dramatic performance improvements and cost reductions through strategic partitioning strategies. This comprehensive guide reveals the secrets to implementing custom partitioning keys that transform your ClickHouse deployment.

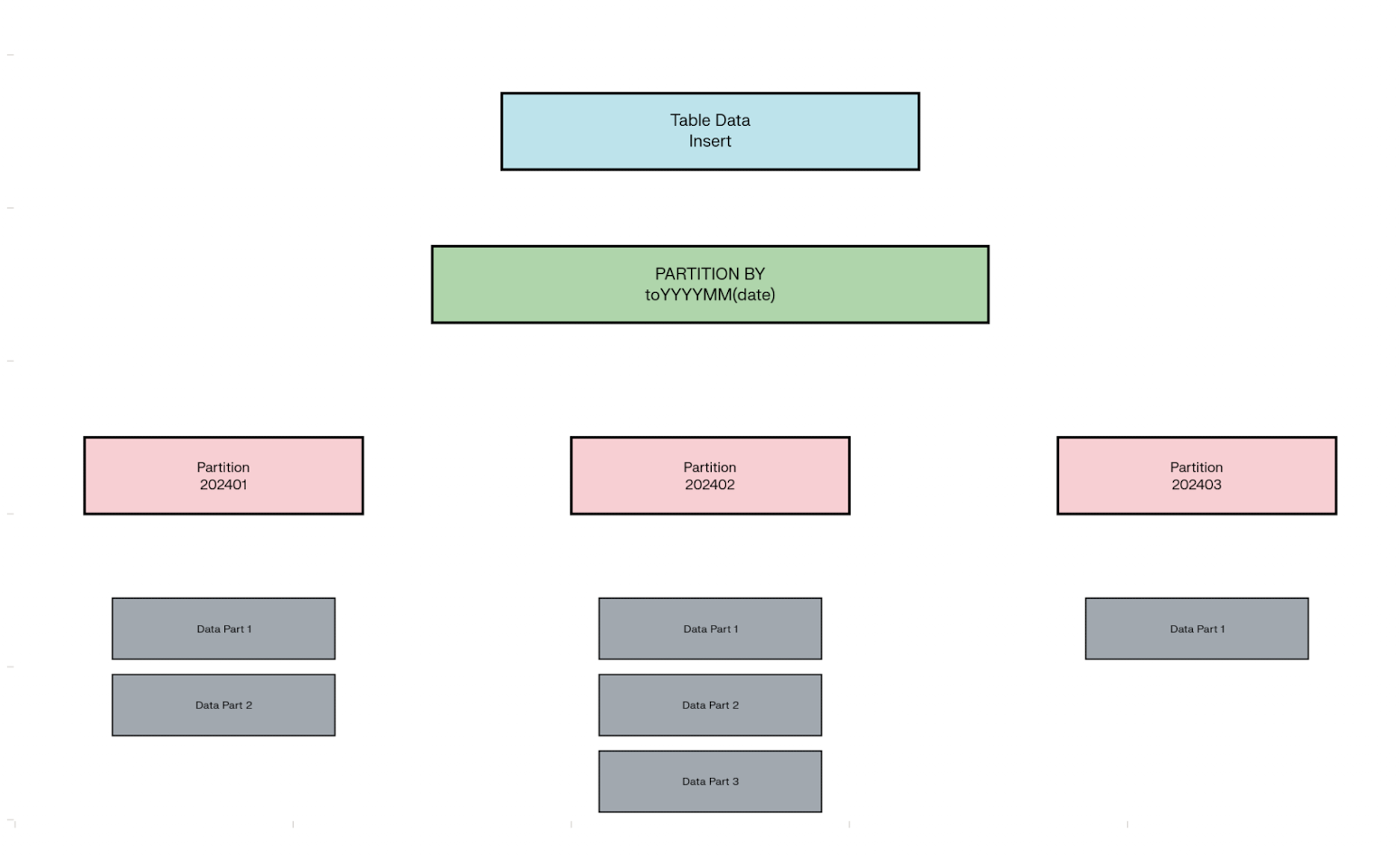

What Are Custom Partitioning Keys in ClickHouse?

Custom partitioning keys in ClickHouse are user-defined expressions, set with the PARTITION BY clause of a MergeTree-family table, that determine how data is physically grouped into partitions. Without a PARTITION BY clause, a table keeps all of its data in a single partition. A custom key lets you tailor data distribution to your specific query patterns, data characteristics, and business requirements.

Why Default Partitioning Falls Short

Most ClickHouse deployments rely on basic date-based partitioning, but this approach can lead to:

- Uneven data distribution across partitions

- Suboptimal query performance for non-temporal queries

- Inefficient resource utilization during data processing

- Higher storage costs due to poor compression ratios

Optimal Cardinality Guidelines

Choose a partition key with low cardinality: ideally in the dozens to hundreds range, and generally no more than 1,000 distinct values. ClickHouse never merges data parts across partitions, so a high-cardinality partition key leads to a proliferation of small parts that can trigger the dreaded “Too many parts” error.

```sql
-- ✅ RECOMMENDED: Monthly partitioning
CREATE TABLE user_events (
    event_date Date,
    user_id UInt64,
    event_type String,
    event_data String
) ENGINE = MergeTree()
PARTITION BY toYYYYMM(event_date)
ORDER BY (user_id, event_date);

-- ❌ WRONG: Too granular partitioning
CREATE TABLE user_events_bad (
    event_date Date,
    user_id UInt64,
    event_type String
) ENGINE = MergeTree()
PARTITION BY user_id -- This creates millions of partitions!
ORDER BY event_date;
```
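Before settling on a key, you can measure how many partitions each candidate expression would produce on existing data. The query below is a minimal sketch against the `user_events` table defined above. The weekly alternative is only an illustration, so swap in your own candidate expressions. ClickHouse also guards against over-granular keys at insert time. By default, an INSERT block that touches more than 100 partitions is rejected (setting `max_partitions_per_insert_block`), which is often the first symptom of a key like `user_id`.

```sql
-- Count the distinct partitions each candidate key would create
SELECT
    uniqExact(toYYYYMM(event_date)) AS by_month,    -- the recommended monthly key
    uniqExact(toMonday(event_date)) AS by_week,     -- an illustrative finer alternative
    uniqExact(user_id)              AS by_user_id   -- the over-granular key
FROM user_events;
```

Candidates whose counts land in the dozens to hundreds over the table's expected lifetime fit the guideline; a count in the thousands or millions is a warning sign.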

The Power of Strategic Custom Partitioning

Performance Benefits

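When the partition key matches common query filters, custom partitioning can deliver measurable performance improvements:

- Up to 10-100x faster query execution for partition-aligned queries, depending on how much data partition pruning eliminates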

- Reduced I/O operations through partition pruning

- Improved parallel processing across multiple partitions

- Enhanced compression ratios within homogeneous partitions
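Partition pruning can be verified directly instead of assumed. The following is a minimal sketch that assumes the monthly-partitioned `user_events` table from earlier; the date range is illustrative. `EXPLAIN` with `indexes = 1` lists each index ClickHouse applied (such as MinMax, Partition, and PrimaryKey) together with how many parts and granules were selected out of the total.

```sql
-- Show which indexes pruned data for a one-month query
EXPLAIN indexes = 1
SELECT count()
FROM user_events
WHERE event_date >= '2024-01-01' AND event_date < '2024-02-01';
```

If the selected parts under the Partition section stay close to the total, the filter is not aligned with the partition key and the query is not benefiting from pruning.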

Cost Optimization

Strategic partitioning directly impacts your bottom line:

- Reduced storage costs through better compression

- Lower compute expenses via faster query execution

- Decreased network traffic through partition elimination

- Optimized resource allocation across cluster nodes

Common Custom Partitioning Strategies

1. Multi-Dimensional Partitioning

Combine multiple columns for optimal data distribution:

```sql
PARTITION BY (toYYYYMM(date), customer_tier, region)
```
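A composite key creates one partition per distinct combination of its elements, so the count grows multiplicatively and is worth estimating before committing. The following is a minimal sketch that assumes a hypothetical `sales` table with `date`, `customer_tier`, and `region` columns.

```sql
-- Hypothetical table: sales(date Date, customer_tier String, region String, ...)
SELECT
    uniqExact(toYYYYMM(date), customer_tier, region) AS total_partitions,
    -- upper bound on how many new partitions each month can add
    uniqExact(customer_tier, region)                 AS tier_region_combinations
FROM sales;
```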

2. Hash-Based Partitioning

Ensure even distribution across partitions:

```sql
PARTITION BY intHash64(user_id) % 100
```
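To check how evenly a hash expression spreads data, you can group existing rows by the same expression. This sketch assumes the `user_events` table from earlier; with a well-mixed hash, the bucket counts should be close to one another. One trade-off is worth weighing. With `% 100`, an insert containing many users typically writes to all 100 partitions at once, which creates up to 100 new parts per insert and sits right at the default `max_partitions_per_insert_block` limit of 100. Hash buckets also group unrelated users, so they do not help range filters on `user_id` or on time.

```sql
-- Row count per hash bucket, using the same expression as the partition key
SELECT
    intHash64(user_id) % 100 AS bucket,
    count() AS row_count
FROM user_events
GROUP BY bucket
ORDER BY bucket;
```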

3. Business Logic Partitioning

Align partitions with business requirements:

```sql
PARTITION BY (product_category, price_range)
```

4. Time-Series Optimization

Custom time-based partitioning for specific use cases:

```sql
PARTITION BY (toStartOfWeek(timestamp), event_type)
```

Industry-Specific Partitioning Patterns

E-commerce Analytics

- Customer segmentation: Partition by customer tier and geographic region

- Product analysis: Organize by product category and price range

- Sales optimization: Structure by sales channel and time period

Financial Services

- Risk management: Partition by risk level and asset class

- Compliance reporting: Organize by regulatory jurisdiction and transaction type

- Real-time monitoring: Structure by account type and transaction amount

IoT and Manufacturing

- Sensor data: Partition by device type and location

- Predictive maintenance: Organize by equipment category and operational status

- Quality control: Structure by production line and quality metrics

Best Practices for Custom Partitioning Keys

1. Analyze Query Patterns

Before implementing custom partitioning:

- Identify frequent query filters in your workload

- Analyze data access patterns across different dimensions

- Understand cardinality of potential partition keys

- Consider future query requirements and data growth
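The query log is one way to base this analysis on data rather than intuition. The following is a minimal sketch that assumes query logging is enabled (`log_queries`) and that the table of interest is `default.user_events`. It lists the most frequent query shapes so you can inspect their WHERE clauses for candidate partition columns.

```sql
-- Most frequent query shapes touching the table over the last 7 days
SELECT
    normalizeQuery(query) AS query_shape,
    count() AS executions,
    avg(query_duration_ms) AS avg_duration_ms
FROM system.query_log
WHERE type = 'QueryFinish'
  AND event_date >= today() - 7
  AND has(tables, 'default.user_events')
GROUP BY query_shape
ORDER BY executions DESC
LIMIT 20;
```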

2. Balance Partition Size

Optimal partition sizing is crucial:

- Target 1-10GB per partition for most use cases

- Avoid too many small partitions (overhead issues)

- Prevent excessively large partitions (processing bottlenecks)

- Monitor partition distribution regularly
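Partition sizes can be read from the `system.parts` table. The following is a minimal sketch for the `user_events` table in the current database. Only active parts are counted, because inactive parts are leftovers from merges that are waiting to be removed.

```sql
-- Size, row count, and number of active parts per partition
SELECT
    partition,
    count() AS active_parts,
    sum(rows) AS row_count,
    formatReadableSize(sum(bytes_on_disk)) AS size_on_disk
FROM system.parts
WHERE database = currentDatabase()
  AND table = 'user_events'
  AND active
GROUP BY partition
ORDER BY partition;
```

Look for two signs that the key needs revisiting: partitions far outside the target size range, and an `active_parts` count that keeps climbing.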

3. Consider Data Lifecycle

Align partitioning with data management:

- Hot data access patterns for recent partitions

- Cold data archival strategies for older partitions

- Retention policies based on partition boundaries

- Backup and recovery considerations
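Partition boundaries turn lifecycle tasks into operations on whole partitions instead of row-by-row rewrites. The following is a sketch for the monthly-partitioned `user_events` table, where each partition value is a `toYYYYMM` number such as `202301`; the specific months are illustrative. The volume name `'cold'` is hypothetical and only works if the table's storage policy defines such a volume.

```sql
-- Retention: remove an entire month without rewriting other data
ALTER TABLE user_events DROP PARTITION 202301;

-- Tiering: move an older month to a cheaper volume
-- ('cold' is hypothetical; it must exist in the table's storage policy)
ALTER TABLE user_events MOVE PARTITION 202302 TO VOLUME 'cold';

-- Backup: snapshot one partition as hard links under the shadow/ directory
ALTER TABLE user_events FREEZE PARTITION 202303;

-- Automated retention: drop whole expired parts rather than rewriting them,
-- then expire rows older than 12 months
ALTER TABLE user_events MODIFY SETTING ttl_only_drop_parts = 1;
ALTER TABLE user_events MODIFY TTL event_date + INTERVAL 12 MONTH;
```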

Implementation Guide: Step-by-Step Process

Phase 1: Assessment and Planning

- Analyze current performance metrics and bottlenecks

- Review query patterns and access frequencies

- Identify optimal partitioning dimensions based on data analysis

- Design partition key strategy aligned with business requirements

Phase 2: Testing and Validation

- Create test tables with proposed partitioning schemes

- Load representative data samples for testing

- Execute benchmark queries to measure performance improvements

- Validate partition distribution and resource utilization
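This phase can be sketched as follows, assuming the `user_events` table from earlier as the source and the `default` database. The candidate table `user_events_test` and its key `(toYYYYMM(event_date), event_type)` are hypothetical, so substitute the scheme under evaluation. The higher `max_partitions_per_insert_block` is set only because a backfill can legitimately touch more than the default 100 partitions in one block.

```sql
-- Candidate table with the proposed partitioning scheme (hypothetical)
CREATE TABLE user_events_test (
    event_date Date,
    user_id UInt64,
    event_type String,
    event_data String
) ENGINE = MergeTree()
PARTITION BY (toYYYYMM(event_date), event_type)
ORDER BY (user_id, event_date);

-- Load a representative sample: the last 90 days
SET max_partitions_per_insert_block = 1000;
INSERT INTO user_events_test
SELECT * FROM user_events
WHERE event_date >= today() - 90;

-- After running the same benchmark query against both tables,
-- compare duration and data read per execution
SELECT
    tables,
    query_duration_ms,
    read_rows,
    formatReadableSize(read_bytes) AS read_size
FROM system.query_log
WHERE type = 'QueryFinish'
  AND event_date = today()
  AND (has(tables, 'default.user_events') OR has(tables, 'default.user_events_test'))
ORDER BY event_time DESC
LIMIT 10;
```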

Phase 3: Production Implementation

- Plan migration strategy with minimal downtime

- Implement gradual rollout across different tables

- Monitor performance metrics during transition

- Optimize based on real-world usage patterns
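ClickHouse cannot change the partition key of an existing table with ALTER, so adopting a new key generally means creating a new table and switching over to it. The following is a minimal sketch that assumes an Atomic database (the default engine for new databases since ClickHouse 20.10). It also assumes a hypothetical `user_events_new` table that has the same columns as `user_events` and the target PARTITION BY.

```sql
-- 1. Backfill the new table month by month to keep each insert bounded
--    (repeat for every month in the table's history)
INSERT INTO user_events_new
SELECT * FROM user_events
WHERE toYYYYMM(event_date) = 202401;

-- 2. Once the new table has caught up, swap the two tables atomically
EXCHANGE TABLES user_events AND user_events_new;
```

Writes that arrive during the backfill still need a plan. For example, you can briefly pause ingestion before copying the final month and swapping, or dual-write to both tables during the transition.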

Advanced Partitioning Techniques

Dynamic Partitioning

Implement adaptive partitioning that evolves with your data:

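- Automatic partition creation: ClickHouse creates a new partition whenever inserted rows produce a new partition-key value

- Dynamic partition pruning for complex queries

- Self-optimizing partition schemes using machine learning, implemented as external tooling (ClickHouse does not change a table's partition key on its own)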

Hierarchical Partitioning

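Approximate nested partition structures for complex datasets (ClickHouse partitions themselves are flat, so the hierarchy lives in the order of a tuple partition key):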

- Multi-level organization for better data locality

- Hierarchical compression strategies

- Optimized query routing through partition hierarchy
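The following is a minimal sketch that assumes a hypothetical `sensor_readings` table. The tuple key lists the coarse level (month) first and the finer level (region) second. Each month-region pair becomes one partition, which operations can target by its full tuple value.

```sql
-- Hypothetical table: month first, region second in the partition key
CREATE TABLE sensor_readings (
    ts DateTime,
    region String,
    device_id UInt64,
    value Float64
) ENGINE = MergeTree()
PARTITION BY (toYYYYMM(ts), region)
ORDER BY (device_id, ts);

-- Address one month for one region by its full tuple value
ALTER TABLE sensor_readings DROP PARTITION (202401, 'eu');
```

Keep the number of regions small: every region multiplies the number of partitions created each month.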

Monitoring and Optimization

Key Metrics to Track

- Partition size distribution across your cluster

- Query performance improvements after partitioning changes

- Resource utilization patterns during different operations

- Compression ratios within individual partitions
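You can compute per-partition compression ratios from the same `system.parts` table. The following is a minimal sketch for the `user_events` table in the current database; the ratio is uncompressed bytes divided by compressed bytes, so higher is better.

```sql
-- Compression ratio per partition across active parts
SELECT
    partition,
    formatReadableSize(sum(data_uncompressed_bytes)) AS uncompressed,
    formatReadableSize(sum(data_compressed_bytes)) AS compressed,
    round(sum(data_uncompressed_bytes) / sum(data_compressed_bytes), 2) AS ratio
FROM system.parts
WHERE database = currentDatabase()
  AND table = 'user_events'
  AND active
GROUP BY partition
ORDER BY partition;
```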

Continuous Optimization

- Regular partition analysis to identify optimization opportunities

- Query pattern evolution monitoring and adaptation

- Seasonal adjustment of partitioning strategies

- Performance regression detection and resolution

Common Pitfalls and How to Avoid Them

Over-Partitioning

Problem: Creating too many small partitions

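Solution: Monitor partition sizes and, when necessary, consolidate by migrating to a coarser partition key (the partition key of an existing table cannot be altered in place)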

Poor Key Selection

Problem: Choosing partition keys that don’t align with query patterns

Solution: Thorough query analysis before implementation

Ignoring Data Skew

Problem: Uneven data distribution across partitions

Solution: Use hash-based or composite partitioning strategies

Why Choose ChistaDATA for Custom Partitioning?

At ChistaDATA, our ClickHouse experts have implemented custom partitioning strategies for organizations across industries:

Our Expertise Includes:

- Performance analysis and bottleneck identification

- Custom partitioning strategy design and implementation

- Migration planning and execution with zero downtime

- Ongoing optimization and performance monitoring

- Training and knowledge transfer for your team

Proven Results:

- Average 50-80% performance improvement in query execution

- 30-60% reduction in storage costs through optimized compression

- Simplified data management and reduced operational overhead

- Enhanced scalability for growing data volumes

Get Started with Custom Partitioning Today

Don’t let suboptimal partitioning limit your ClickHouse performance. Contact ChistaDATA today to schedule your free custom partitioning assessment and discover how strategic data organization can transform your analytics capabilities.

Ready to optimize your ClickHouse deployment?

