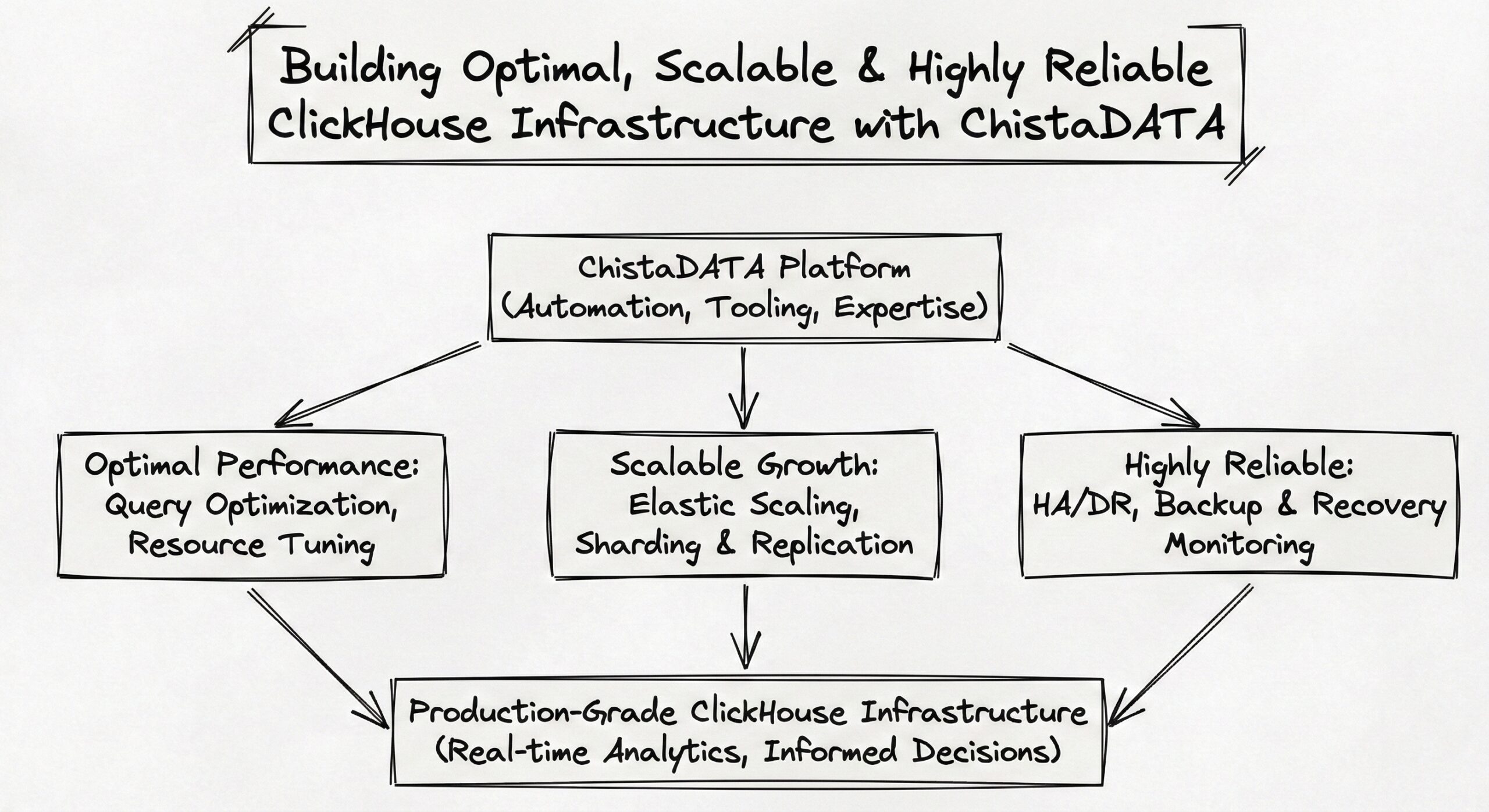

Building Optimal, Scalable and Highly Reliable ClickHouse Infrastructure with ChistaDATA Server

In today’s data-driven landscape, businesses rely heavily on real-time analytics to make informed decisions. ClickHouse, an open-source columnar database management system, has emerged as a powerful solution for high-performance online analytical processing (OLAP). Known for its lightning-fast query execution and efficient data compression, ClickHouse enables organizations to analyze massive datasets in seconds. However, while ClickHouse excels in performance, building and managing a production-grade ClickHouse infrastructure that is optimal, scalable, and highly reliable presents significant operational challenges.

This is where ChistaDATA steps in: a comprehensive platform designed to simplify and enhance ClickHouse infrastructure operations. By combining deep technical expertise, automation, and enterprise-grade tooling, ChistaDATA helps organizations get the full benefit of ClickHouse without the burden of complex database administration. In this article, we explore how ChistaDATA supports building optimal, scalable, and highly reliable ClickHouse infrastructure.

Understanding the Operational Challenges of ClickHouse

ClickHouse was built for speed and efficiency. Its columnar architecture can deliver sub-second responses for well-designed queries even on very large datasets, making it a good fit for use cases such as real-time analytics, log processing, time-series analysis, and business intelligence. However, deploying ClickHouse in production requires careful planning and ongoing management across several critical dimensions:

- Cluster Setup and Configuration: Deploying a distributed ClickHouse cluster involves configuring ZooKeeper (or ClickHouse Keeper) for coordination, setting up replication and sharding, and ensuring that the network topology aligns with data access patterns.

- Data Ingestion and Pipeline Management: Efficiently ingesting large volumes of data from diverse sources (Kafka, S3, APIs, etc.) while maintaining consistency and minimizing latency.

- Performance Tuning: Optimizing query performance through indexing strategies, materialized views, proper schema design, and resource allocation.

- Scalability and Elasticity: Scaling the cluster horizontally or vertically in response to growing data volumes and user demand.

- High Availability and Disaster Recovery: Ensuring data durability, node redundancy, automated failover, and backup strategies.

- Monitoring and Observability: Tracking system health, query performance, resource utilization, and anomaly detection.

- Security and Compliance: Implementing access controls, encryption, auditing, and compliance with regulatory standards.

Without proper tooling and expertise, these tasks can become overwhelming, leading to performance bottlenecks, downtime, and increased operational overhead.

The Role of ChistaDATA in ClickHouse Operations

ChistaDATA is not just another monitoring tool or managed service — it is a purpose-built platform that addresses the full lifecycle of ClickHouse infrastructure operations. It combines automation, intelligence, and best practices into a unified control plane that simplifies the complexity of managing large-scale ClickHouse deployments.

1. Automated Cluster Provisioning and Lifecycle Management

One of the most time-consuming aspects of ClickHouse operations is setting up and maintaining clusters. ChistaDATA streamlines this process through automated provisioning workflows. With a few clicks, users can deploy highly available ClickHouse clusters across on-premises, cloud, or hybrid environments.

The platform handles all underlying complexities, including:

- Automatic configuration of ClickHouse servers and ZooKeeper ensembles

- Network segmentation and firewall rules

- SSL/TLS encryption setup

- Role-based access control (RBAC) integration

- Initial schema bootstrapping

Moreover, ChistaDATA supports seamless upgrades, patching, and version management. When a new version of ClickHouse is released, the platform can automatically test and roll out the update with minimal disruption, so that the infrastructure remains secure and up to date.

2. Intelligent Data Ingestion Pipelines

Efficient data ingestion is crucial for real-time analytics. ChistaDATA provides pre-built connectors and templates for popular data sources such as Apache Kafka, Amazon S3, Google Cloud Storage, and REST APIs. These connectors are optimized for high-throughput and low-latency ingestion into ClickHouse.

Key features include:

- Schema inference and evolution: Automatically detects incoming data schemas and suggests optimal table structures.

- Batch and stream processing modes: Supports both micro-batch ingestion and continuous streaming.

- Data validation and transformation: Built-in support for data cleansing, filtering, and enrichment using SQL or custom scripts.

- Backpressure handling: Prevents pipeline overloads during traffic spikes by dynamically adjusting ingestion rates.

By abstracting the complexity of ETL/ELT pipelines, ChistaDATA ensures that data flows reliably into ClickHouse with minimal manual intervention.
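To make the backpressure idea more concrete, here is a minimal Python sketch of one common policy: additive increase, multiplicative decrease (AIMD). It is not ChistaDATA's implementation. The function name, the latency target, and the rate limits are all assumptions chosen for illustration.

```python
def next_ingest_rate(current_rps, insert_latency_s, target_latency_s=1.0,
                     min_rps=1_000, max_rps=1_000_000, step_rps=10_000):
    """Return the rows-per-second rate to use for the next ingestion interval.

    Hypothetical AIMD policy: raise the rate by a fixed step while inserts
    stay under the latency target, and halve it as soon as they exceed it.
    """
    if insert_latency_s > target_latency_s:
        proposed = current_rps // 2          # multiplicative decrease
    else:
        proposed = current_rps + step_rps    # additive increase
    # Clamp to the configured bounds so the pipeline never stalls or runs away.
    return max(min_rps, min(max_rps, proposed))


# Simulate a short traffic spike: latency rises above the target, then recovers.
rate = 50_000
for observed_latency in [0.4, 0.5, 2.3, 1.8, 0.6]:
    rate = next_ingest_rate(rate, observed_latency)
print(rate)
```

Halving on overload backs off quickly during a spike, while the small additive step recovers throughput gradually once ClickHouse catches up.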

3. Performance Optimization at Scale

ClickHouse performance depends heavily on configuration and query patterns. ChistaDATA includes an intelligent query analyzer that monitors all incoming queries and identifies performance bottlenecks. It provides actionable recommendations such as:

- Rewriting inefficient queries

- Choosing an appropriate primary key (sort order) and adding data-skipping indexes

- Partitioning strategies based on time or dimension

- Materialized view creation for pre-aggregation

- Memory and CPU tuning parameters

Additionally, ChistaDATA offers a query sandbox environment where developers can test and optimize queries before deploying them to production. This reduces the risk of long-running or resource-intensive queries impacting overall system performance.

The platform also leverages machine learning models to predict query execution times and resource consumption, enabling proactive capacity planning.

4. Scalability and Elastic Architecture

As data volumes grow, the ability to scale becomes critical. ChistaDATA enables both vertical and horizontal scaling of ClickHouse clusters with minimal downtime.

- Vertical scaling: Automatically increases CPU, memory, or disk resources on existing nodes based on workload demands.

- Horizontal scaling: Adds new shards or replicas to distribute load and improve fault tolerance.

ChistaDATA uses predictive analytics to forecast growth trends and trigger auto-scaling policies. For example, if daily data ingestion is increasing by 15% week-over-week, the system can recommend or initiate scaling actions before performance degrades.
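The arithmetic behind such a forecast is simple compound growth. The sketch below, which is an illustration and not the platform's forecasting model, estimates how many weeks remain before daily ingestion reaches a capacity limit. The function name and the example volumes are assumptions; only the 15% weekly growth figure comes from the text.

```python
import math


def weeks_until_capacity(current_daily_gb, capacity_daily_gb, weekly_growth=0.15):
    """Weeks until ingestion reaches capacity, assuming compound weekly growth.

    Solves current * (1 + g)^n >= capacity for the smallest whole number n.
    """
    if weekly_growth <= 0:
        raise ValueError("weekly_growth must be positive")
    if current_daily_gb >= capacity_daily_gb:
        return 0  # already at or over capacity
    ratio = capacity_daily_gb / current_daily_gb
    return math.ceil(math.log(ratio) / math.log(1 + weekly_growth))


# Hypothetical cluster: ingesting 500 GB/day, sized for 1,000 GB/day.
print(weeks_until_capacity(500, 1_000))
```

At 15% week-over-week growth, ingestion volume doubles in about five weeks, which shows why scaling decisions need to be triggered well before current utilization looks alarming.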

Furthermore, the platform supports multi-region deployments for global applications, ensuring low-latency access and data locality compliance.

5. High Availability and Disaster Recovery

Downtime is unacceptable in mission-critical analytics systems. ChistaDATA implements a robust high availability (HA) architecture by default:

- Multi-node clusters with automatic failover

- Synchronous and asynchronous replication options

- Automated node recovery and re-synchronization

- Health checks and self-healing mechanisms

For disaster recovery, ChistaDATA provides:

- Incremental and full backups stored in secure cloud storage

- Point-in-time recovery (PITR) capabilities

- Cross-region backup replication

- Backup integrity verification

These features ensure that data is protected against hardware failures, human errors, and regional outages.

6. Comprehensive Monitoring and Observability

Visibility into system behavior is essential for maintaining reliability. ChistaDATA offers a unified monitoring dashboard that provides real-time insights into:

- Query performance metrics (latency, throughput, errors)

- Resource utilization (CPU, memory, disk I/O, network)

- Cluster health and node status

- Replication lag and ZooKeeper quorum stability

- User activity and access logs

Custom alerts can be configured based on thresholds, such as high query latency, low disk space, or failed queries. Notifications are delivered via email, Slack, or incident management tools such as PagerDuty.
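Threshold-based alerting of this kind can be sketched in a few lines of Python. The metric names, limits, and the `Threshold` type below are hypothetical and do not reflect ChistaDATA's actual alert configuration format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Threshold:
    metric: str      # hypothetical metric name
    limit: float     # value that triggers the alert
    direction: str   # "above" or "below"


def evaluate(metrics, thresholds):
    """Return a human-readable alert message for each breached threshold."""
    alerts = []
    for t in thresholds:
        value = metrics.get(t.metric)
        if value is None:
            continue  # metric not reported in this interval
        breached = value > t.limit if t.direction == "above" else value < t.limit
        if breached:
            alerts.append(f"{t.metric}={value} is {t.direction} limit {t.limit}")
    return alerts


thresholds = [
    Threshold("p95_query_latency_ms", 1500, "above"),
    Threshold("disk_free_pct", 15, "below"),
    Threshold("failed_queries_per_min", 5, "above"),
]
current = {"p95_query_latency_ms": 1800, "disk_free_pct": 22.0,
           "failed_queries_per_min": 0}
for message in evaluate(current, thresholds):
    print(message)
```

In this example only the latency threshold is breached, so a single alert would be forwarded to the notification channel.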

The platform also supports distributed tracing, allowing operators to follow a query’s journey across multiple nodes and identify bottlenecks in the execution pipeline.

7. Security and Governance

Enterprise-grade security is non-negotiable. ChistaDATA enforces strict security policies across the ClickHouse infrastructure:

- Authentication: Integration with LDAP, Active Directory, OAuth, and SAML for centralized identity management.

- Authorization: Fine-grained access control at the database, table, column, and row level.

- Encryption: Data encryption in transit (TLS) and at rest (AES-256).

- Auditing: Full audit trails of all data access and administrative actions.

- Compliance: Support for GDPR, HIPAA, SOC 2, and other regulatory frameworks.

Security configurations are standardized and enforced across all environments, reducing the risk of misconfigurations.

8. Cost Optimization and Resource Efficiency

Running large-scale ClickHouse clusters can be expensive, especially in the cloud. ChistaDATA helps optimize costs through:

- Right-sizing recommendations: Analyzes resource usage patterns and suggests optimal instance types.

- Storage tiering: Moves cold data to lower-cost storage (e.g., S3) while keeping hot data on fast SSDs.

- Query cost analysis: Identifies expensive queries and suggests optimizations to reduce compute usage.

- Idle resource detection: Flags underutilized nodes that can be downsized or decommissioned.

These capabilities ensure that organizations get the best performance per dollar spent.
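As a rough illustration of idle resource detection, the following Python sketch flags nodes whose average CPU utilization stays under a cutoff. The node names, the 10% cutoff, and the minimum sample count are assumptions; a real detector would likely also consider memory, disk, and query load.

```python
from statistics import mean


def find_idle_nodes(cpu_samples, max_avg_pct=10.0, min_samples=3):
    """Return node names whose mean CPU utilization is below max_avg_pct.

    Nodes with fewer than min_samples readings are skipped, since there is
    not enough data to call them idle.
    """
    idle = []
    for node, samples in cpu_samples.items():
        if len(samples) >= min_samples and mean(samples) < max_avg_pct:
            idle.append(node)
    return sorted(idle)


# Hypothetical CPU utilization readings (percent) per node.
readings = {
    "ch-node-1": [55, 60, 48],
    "ch-node-2": [3, 5, 4],
    "ch-node-3": [2],          # too few samples to judge
}
print(find_idle_nodes(readings))
```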

Real-World Use Cases Enabled by ChistaDATA

Financial Services: Real-Time Fraud Detection

A global fintech company processes millions of transactions daily. Using ClickHouse powered by ChistaDATA, they built a real-time fraud detection engine that analyzes transaction patterns, device fingerprints, and behavioral signals. The platform’s low-latency queries enable sub-second risk scoring, while automated scaling handles peak loads during holiday shopping seasons.

E-Commerce: Personalized Product Recommendations

An e-commerce giant uses ClickHouse to store user behavior data (clicks, searches, purchases) and generate real-time product recommendations. ChistaDATA manages the ingestion pipeline from Kafka, optimizes query performance for recommendation algorithms, and ensures high availability during flash-sale events that drive traffic spikes.

IoT and Telemetry: Industrial Monitoring

A manufacturing firm collects sensor data from thousands of machines. ClickHouse stores time-series data with high compression ratios, and ChistaDATA automates the ingestion, retention policies, and rollup aggregations. Operators use dashboards to monitor equipment health and predict maintenance needs.

Digital Advertising: Campaign Analytics

An ad tech platform uses ClickHouse to analyze impression, click, and conversion data in real time. With ChistaDATA, they manage petabyte-scale datasets across multiple regions, run complex cohort analyses, and deliver self-service analytics to clients with strict SLAs.

Why Choose ChistaDATA Over DIY or Other Solutions?

While it’s possible to manage ClickHouse manually or with generic DevOps tools, doing so requires significant expertise and ongoing effort. Many organizations find themselves spending more time on database administration than on deriving business value from data.

ChistaDATA differentiates itself through:

- ClickHouse-specific expertise: Unlike generic database platforms, ChistaDATA is built by engineers who have deep experience with ClickHouse internals.

- End-to-end automation: From provisioning to backups, upgrades, and scaling, the platform minimizes manual intervention.

- Proactive intelligence: Uses AI/ML to predict issues before they occur and recommend optimizations.

- Enterprise readiness: Offers features like SSO, audit logging, and compliance certifications out of the box.

- Support and SLAs: Provides 24/7 expert support with guaranteed response times and uptime SLAs.

Compared to running ClickHouse on Kubernetes with open-source operators, ChistaDATA offers a more integrated, user-friendly, and operationally resilient experience.

Best Practices for Building Reliable ClickHouse Infrastructure with ChistaDATA

To maximize the benefits of ChistaDATA, organizations should follow these best practices:

- Start with a clear data model: Design your schema with query patterns in mind. Use MergeTree engines appropriately and avoid excessive indexing.

- Leverage automated backups: Enable daily incremental backups and test recovery procedures regularly.

- Monitor query performance: Use ChistaDATA’s query analyzer to identify and fix slow queries early.

- Implement data retention policies: Define TTL rules to automatically archive or delete old data.

- Use role-based access control: Limit permissions based on job function to reduce security risks.

- Plan for growth: Use forecasting tools to anticipate scaling needs and budget accordingly.

- Train your team: Ensure data engineers and analysts understand how to use ChistaDATA effectively.
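For the data retention practice above, ClickHouse expresses table-level retention as a TTL expression on a MergeTree table. The Python sketch below builds such a statement as a string. The helper name, the table `analytics.events`, and the column `event_date` are hypothetical, and the sketch only generates the SQL; it does not connect to a server.

```python
import re

# Conservative identifier patterns, so the helper never emits unexpected SQL.
_TABLE = re.compile(r"^[A-Za-z_][A-Za-z0-9_]*(\.[A-Za-z_][A-Za-z0-9_]*)?$")
_COLUMN = re.compile(r"^[A-Za-z_][A-Za-z0-9_]*$")


def retention_ttl_statement(table, date_column, keep_days):
    """Build an ALTER TABLE ... MODIFY TTL statement that expires old rows.

    With no explicit action, a ClickHouse table TTL deletes expired rows.
    """
    if not _TABLE.match(table) or not _COLUMN.match(date_column):
        raise ValueError("unexpected characters in identifier")
    if keep_days <= 0:
        raise ValueError("keep_days must be positive")
    return (f"ALTER TABLE {table} MODIFY TTL "
            f"{date_column} + INTERVAL {keep_days} DAY")


# Hypothetical table and column names, keeping 90 days of data.
print(retention_ttl_statement("analytics.events", "event_date", 90))
```

Generating the statement from a reviewed template keeps retention rules consistent across tables. Whether to delete expired data or move it to cheaper storage remains a per-table decision.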

The Future of ClickHouse Operations

As data volumes continue to explode, the need for scalable, reliable, and easy-to-manage analytics infrastructure will only grow. ClickHouse will remain at the forefront of real-time analytics, but its success in enterprise environments depends on how well it is operated.

ChistaDATA is positioned to be the operational backbone for ClickHouse, evolving with new features such as:

- AI-powered query optimization

- Serverless ClickHouse deployments

- Enhanced multi-tenancy support

- Tighter integration with data mesh architectures

- Advanced cost governance and chargeback reporting

These innovations will further reduce the operational burden and make ClickHouse accessible to a broader range of organizations.
