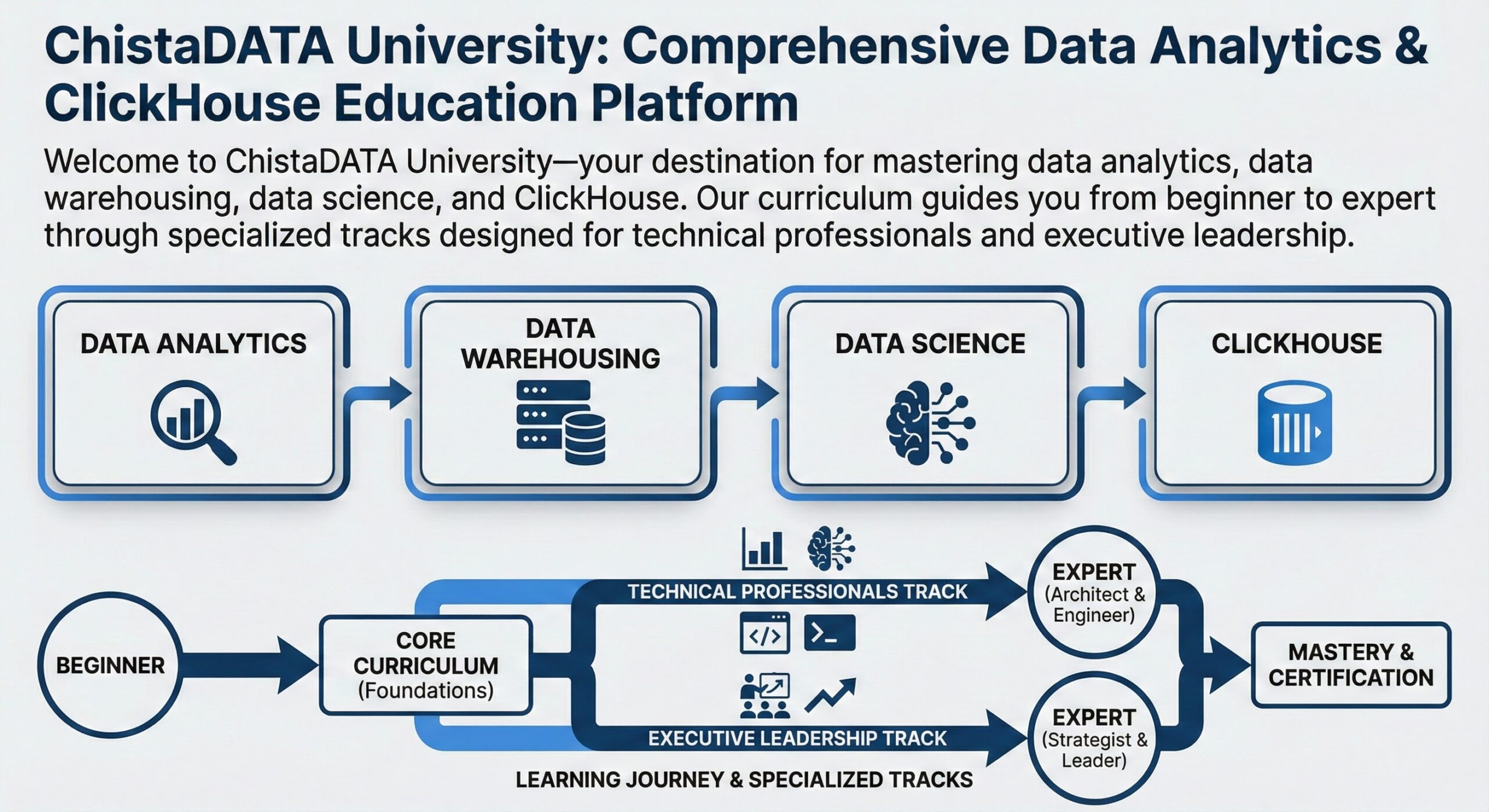

ChistaDATA University: Comprehensive Data Analytics & ClickHouse Education Platform

Welcome to ChistaDATA University—your destination for mastering data analytics, data warehousing, data science, and ClickHouse. Our curriculum guides you from beginner to expert through specialized tracks designed for technical professionals and executive leadership.

Learning Paths Overview

Our structured learning paths serve four distinct audiences:

- Beginners: Foundational concepts and hands-on introduction to data technologies

- Intermediates: Advanced techniques and practical application development

- Experts: Cutting-edge optimization, architecture, and troubleshooting

- CTOs/CXOs: Strategic decision-making, business value, and leadership perspectives

1. Data Analytics

For Beginners: Introduction to Data Analytics

Course Duration: 8 weeks | Learning Hours: 40 hours

Learning Objectives:

- Understand data analytics fundamentals and their role in business decision-making

- Master basic data collection, cleaning, and exploration techniques

- Identify patterns and trends in datasets

- Create clear visualizations to communicate insights

- Understand the data analytics lifecycle and workflow

Prerequisites: Basic computer skills, familiarity with spreadsheets

Course Outline:

- Introduction to Data Analytics—What is data analytics, types of analytics (descriptive, diagnostic, predictive, prescriptive)

- Data Ecosystem and Roles—Understanding modern data ecosystems, key players including data analysts, data scientists, and business analysts

- Data Types and Structures—Working with structured and unstructured data, file formats, data sources

- Excel for Data Analysis—Pivot tables, conditional formatting, basic functions, data visualization with charts

- Exploratory Data Analysis (EDA)—Statistical summaries, data distribution, identifying outliers

- Basic Data Visualization—Charts, graphs, dashboards, principles of effective visualization

- Introduction to Business Analytics—Using data to solve business problems, key metrics and KPIs

- Data Ethics and Privacy—Understanding data governance, responsible data usage

Key Tools Covered: Microsoft Excel, Google Sheets, basic visualization tools

Deliverables:

- 3 hands-on projects analyzing real-world datasets

- Final capstone project presenting business insights

- Course completion certificate

For Intermediates: Advanced Data Analytics

Course Duration: 10 weeks | Learning Hours: 60 hours

Learning Objectives:

- Perform advanced data analysis using programming languages

- Implement statistical analysis and hypothesis testing

- Build predictive models for business forecasting

- Create interactive dashboards and advanced visualizations

- Master data manipulation and transformation techniques

Prerequisites: Completion of beginner course or equivalent experience, basic programming knowledge

Course Outline:

- Python for Data Analysis—NumPy, Pandas, data manipulation

- Advanced Statistical Methods—Hypothesis testing, ANOVA, regression analysis

- Data Wrangling and Transformation—Cleaning complex datasets, feature engineering

- Advanced Visualization—Interactive dashboards with Tableau, Power BI

- Time Series Analysis—Trends, seasonality, forecasting methods

- A/B Testing and Experimentation—Designing experiments, statistical significance

- Predictive Analytics—Introduction to machine learning for analytics

- Web Analytics and Marketing Analytics—Digital analytics, customer segmentation

- SQL for Analytics—Complex queries, joins, window functions

- Analytics Project Management—Agile methodologies for analytics projects

Key Tools Covered: Python (Pandas, NumPy, Matplotlib, Seaborn), Tableau, Power BI, SQL

Deliverables:

- 5 industry-specific case study projects

- Interactive dashboard portfolio

- Predictive modeling project with business recommendations

For Experts: Enterprise Analytics Architecture

Course Duration: 12 weeks | Learning Hours: 80 hours

Learning Objectives:

- Design and implement enterprise-scale analytics architectures

- Build end-to-end analytics pipelines and data products

- Master advanced machine learning and AI integration

- Lead analytics transformation initiatives

- Optimize analytics infrastructure for performance and cost

Prerequisites: 2+ years of analytics experience, strong programming skills, SQL proficiency

Course Outline:

- Analytics Architecture Design—Scalable analytics platforms, microservices architecture

- Real-Time Analytics Systems—Stream processing, event-driven architectures

- Advanced Machine Learning for Analytics—Ensemble methods, deep learning applications

- MLOps and Model Deployment—CI/CD for analytics, model monitoring

- Big Data Analytics—Hadoop, Spark, distributed computing

- Cloud Analytics Platforms—AWS, Azure, GCP analytics services

- Data Product Development—Building self-service analytics platforms

- Advanced Visualization Engineering—Custom visualizations, D3.js, real-time dashboards

- Analytics Governance and Security—Data lineage, access controls, compliance

- Performance Optimization—Query optimization, infrastructure tuning, cost management

Key Tools Covered: Apache Spark, Kafka, Cloud platforms (AWS/Azure/GCP), Docker, Kubernetes, MLOps tools

Deliverables:

- Enterprise analytics architecture design document

- Production-grade real-time analytics pipeline

- Comprehensive analytics platform implementation

For CTOs/CXOs: Strategic Data Analytics Leadership

Course Duration: 6 weeks | Learning Hours: 30 hours (executive format)

Learning Objectives:

- Understand the strategic value of analytics for business transformation

- Make data-driven investment and resource allocation decisions

- Build and lead high-performance analytics teams

- Navigate the AI and analytics vendor landscape

- Communicate analytics value to boards and stakeholders

Prerequisites: C-level or senior leadership position, business strategy background

Course Outline:

- Analytics as Strategic Asset—Building competitive advantage through data

- ROI of Analytics Initiatives—Business case development, value measurement

- Building Analytics Organizations—Team structures, talent acquisition, skills development

- AI and Analytics Technology Landscape—Platform selection, build vs. buy decisions

- Data Governance and Ethics—Regulatory compliance, responsible AI

- Digital Transformation through Analytics—Change management, organizational adoption

- Analytics Metrics and KPIs—Measuring effectiveness and business impact

- Vendor Management and Partnerships—Selecting and managing technology partners

- Board-Level Communication—Presenting analytics strategies and investment cases

- Future of Analytics—Emerging trends, GenAI, agentic AI applications

Format: Executive masterclasses, Fortune 500 case studies, peer discussions

Deliverables:

- Strategic analytics roadmap for your organization

- Board-ready investment proposal

- Analytics maturity assessment

2. Data Warehousing

For Beginners: Data Warehousing Fundamentals

Course Duration: 8 weeks | Learning Hours: 45 hours

Learning Objectives:

- Understand data warehousing concepts and architecture

- Learn dimensional modeling techniques

- Master ETL (Extract, Transform, Load) processes

- Design fact and dimension tables

- Implement basic data warehouse solutions

Prerequisites: Basic SQL knowledge, understanding of relational databases

Course Outline:

- Introduction to Data Warehousing—What is a data warehouse, benefits, use cases

- Data Warehouse vs. Database vs. Data Lake—Understanding the differences

- Data Warehouse Architecture—Components, layers (staging, integration, presentation)

- Dimensional Modeling Concepts—Facts, dimensions, measures

- Star Schema Design—Building star schemas, best practices

- Snowflake Schema—When to use snowflake schemas, normalization

- ETL Fundamentals—Extract, transform, load processes

- Data Quality and Cleansing—Ensuring data integrity

- Slowly Changing Dimensions (SCD)—Types 1, 2, 3 implementations

- Introduction to Data Marts—Building departmental data marts
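
To make the Slowly Changing Dimensions topic concrete, here is a minimal Python sketch of a Type 2 update. When a tracked attribute changes, the current dimension row is closed and a new version is appended, so history is kept rather than overwritten. It is illustrative only: the `CustomerDimRow` record, the tracked `city` attribute, and the `apply_scd2` helper are hypothetical names. A production warehouse would normally implement this logic in SQL or an ETL tool.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional


@dataclass
class CustomerDimRow:
    surrogate_key: int         # warehouse-generated key, one per version
    customer_id: str           # natural (business) key from the source system (hypothetical)
    city: str                  # attribute whose history we want to keep (hypothetical)
    valid_from: date
    valid_to: Optional[date]   # None while the row is the current version
    is_current: bool


def apply_scd2(dim: List[CustomerDimRow], customer_id: str, city: str, as_of: date) -> None:
    """Type 2 SCD update: close the current version if the attribute changed,
    then append a new current version with a fresh surrogate key."""
    current = next(
        (r for r in dim if r.customer_id == customer_id and r.is_current), None
    )
    if current is not None and current.city == city:
        return  # attribute unchanged: keep the existing version
    if current is not None:
        current.valid_to = as_of   # expire the old version
        current.is_current = False
    next_key = max((r.surrogate_key for r in dim), default=0) + 1
    dim.append(CustomerDimRow(next_key, customer_id, city, as_of, None, True))


if __name__ == "__main__":
    dimension: List[CustomerDimRow] = []
    apply_scd2(dimension, "C001", "Pune", date(2023, 1, 1))
    apply_scd2(dimension, "C001", "Mumbai", date(2024, 6, 1))
    for row in dimension:
        print(row)
```

By contrast, a Type 1 update would overwrite `city` in place, and a Type 3 update would keep only the previous value in an extra column.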

Key Tools Covered: SQL, basic ETL tools, database management systems

Deliverables:

- Dimensional model design for sample business scenario

- ETL pipeline implementation

- Simple data warehouse implementation project

For Intermediates: Advanced Data Warehousing

Course Duration: 10 weeks | Learning Hours: 65 hours

Learning Objectives:

- Design enterprise data warehouse architectures

- Implement complex ETL workflows and data pipelines

- Master advanced dimensional modeling techniques

- Optimize data warehouse performance

- Implement data governance frameworks

Prerequisites: Completion of beginner course, 1+ year of database experience, advanced SQL

Course Outline:

- Enterprise Data Warehouse Architecture—Kimball vs. Inmon approaches, hybrid architectures

- Advanced ETL Development—Complex transformations, error handling, incremental loads

- Data Pipeline Orchestration—Apache Airflow, workflow automation

- Advanced Dimensional Modeling—Bridge tables, role-playing dimensions, factless fact tables

- Data Vault Modeling—Data Vault 2.0, hubs, links, satellites

- Partitioning and Indexing Strategies—Performance optimization techniques

- Data Warehouse Security—Access controls, encryption, compliance

- Change Data Capture (CDC)—Real-time data integration techniques

- Data Quality Management—Data profiling, validation rules, monitoring

- Cloud Data Warehousing—Snowflake, Redshift, BigQuery architectures

Key Tools Covered: Apache Airflow, Kafka, dbt, cloud data warehouses (Snowflake/Redshift/BigQuery)

Deliverables:

- Enterprise data warehouse architecture design

- Production-grade ETL pipeline implementation

- Data governance framework document

For Experts: Data Warehouse Optimization & Architecture

Course Duration: 12 weeks | Learning Hours: 75 hours

Learning Objectives:

- Architect multi-petabyte-scale data warehouses

- Implement advanced performance tuning and optimization

- Design hybrid cloud and on-premises solutions

- Lead data warehouse modernization initiatives

- Master cost optimization strategies

Prerequisites: 3+ years of data warehousing experience, architecture background

Course Outline:

- Massive-Scale Data Warehouse Architecture—Handling petabyte-scale data

- Advanced Query Optimization—Execution plans, materialized views, query rewriting

- Columnar Storage Engines—Understanding columnar databases for analytics

- Data Warehouse Automation (DWA)—Automated design and deployment

- Real-Time Data Warehousing—Lambda and Kappa architectures

- Multi-Cloud and Hybrid Architectures—Cross-cloud data integration

- Data Warehouse as a Service (DWaaS)—Modern cloud-native approaches

- Advanced Security and Compliance—GDPR, HIPAA, SOC 2 compliance

- Cost Optimization—Resource management, workload optimization

- Data Warehouse Migration—Legacy system modernization strategies

- Disaster Recovery and High Availability—Backup strategies, failover

- Future of Data Warehousing—Lakehouse architecture, data mesh concepts

Key Tools Covered: Advanced cloud platforms, Databricks, Snowflake advanced features, Terraform, monitoring tools

Deliverables:

- Multi-cloud data warehouse reference architecture

- Migration strategy document for legacy systems

- Cost optimization framework

For CTOs/CXOs: Data Warehouse Strategy & ROI

Course Duration: 4 weeks | Learning Hours: 20 hours

Learning Objectives:

- Evaluate data warehousing technology options

- Calculate ROI for data warehouse investments

- Make build vs. buy vs. cloud decisions

- Understand total cost of ownership (TCO)

- Align data warehouse strategy with business goals

Prerequisites: Executive leadership role, strategic planning experience

Course Outline:

- Strategic Value of Data Warehousing—Business intelligence enablement

- Technology Landscape—Modern data warehouse platform comparison

- Investment Decision Framework—ROI calculation, TCO analysis

- Cloud vs. On-Premises Strategy—Migration considerations, hybrid approaches

- Organizational Impact—Change management, skill requirements

- Vendor Selection Process—RFP development, evaluation criteria

- Data Warehouse Governance—Policies, standards, compliance

- Success Metrics—KPIs for data warehouse initiatives

Format: Executive seminars, vendor briefings, peer roundtables

Deliverables:

- Data warehouse strategy document

- Technology selection framework

- Business case presentation

3. Data Science

For Beginners: Data Science Foundation

Course Duration: 12 weeks | Learning Hours: 70 hours

Learning Objectives:

- Understand data science workflow and methodologies

- Learn Python programming for data science

- Master fundamental statistical concepts

- Build basic machine learning models

- Develop data storytelling and visualization skills

Prerequisites: Basic mathematics, logical thinking—no prior programming required

Course Outline:

- Introduction to Data Science—What is data science, applications, career paths

- Python Programming Fundamentals—Variables, data types, control structures, functions

- NumPy and Pandas—Array operations, DataFrame manipulation

- Data Visualization—Matplotlib, Seaborn, storytelling with data

- Descriptive Statistics—Mean, median, mode, variance, standard deviation

- Probability Basics—Probability distributions, conditional probability

- Exploratory Data Analysis—Data profiling, pattern recognition

- Introduction to Machine Learning—Supervised vs. unsupervised learning

- Linear Regression—Simple and multiple regression, evaluation metrics

- Classification Algorithms—Logistic regression, decision trees

- Clustering—K-means, hierarchical clustering

- Data Science Ethics—Bias, fairness, privacy considerations

Key Tools Covered: Python, Jupyter Notebooks, Pandas, NumPy, Scikit-learn, Matplotlib

Deliverables:

- Four hands-on data science projects

- Portfolio of data visualizations

- End-to-end machine learning project

For Intermediates: Applied Data Science

Course Duration: 14 weeks | Learning Hours: 90 hours

Learning Objectives:

- Build advanced machine learning models

- Master feature engineering and model selection

- Implement deep learning solutions

- Work with big data technologies

- Deploy machine learning models to production

Prerequisites: Completion of beginner course, Python proficiency, statistics foundation

Course Outline:

- Advanced Machine Learning Algorithms—Random forests, gradient boosting, SVM

- Feature Engineering—Feature selection, transformation, encoding

- Model Evaluation and Selection—Cross-validation, hyperparameter tuning

- Ensemble Methods—Bagging, boosting, stacking

- Time Series Forecasting—ARIMA, Prophet, neural networks for time series

- Natural Language Processing (NLP)—Text processing, sentiment analysis

- Deep Learning Fundamentals—Neural networks, backpropagation

- Computer Vision—Image classification, convolutional neural networks

- Big Data with PySpark—Distributed computing for data science

- MLOps Basics—Model deployment, monitoring, versioning

- Recommendation Systems—Collaborative filtering, content-based filtering

- Advanced Analytics—Survival analysis, causal inference

Key Tools Covered: Scikit-learn, TensorFlow/PyTorch, PySpark, MLflow, Docker

Deliverables:

- Six advanced data science projects across different domains

- Deployed machine learning application

- Research paper or blog post on a technical topic

For Experts: Research & Production Data Science

Course Duration: 16 weeks | Learning Hours: 100 hours

Learning Objectives:

- Conduct cutting-edge data science research

- Design and implement production-grade ML systems

- Master advanced deep learning architectures

- Lead data science teams and projects

- Contribute to the open-source data science community

Prerequisites: 2+ years of data science experience, deep learning knowledge, production experience

Course Outline:

- Advanced Deep Learning—Transformers, attention mechanisms, BERT, GPT architectures

- Generative AI—GANs, VAEs, diffusion models

- Large Language Models (LLMs)—Fine-tuning, prompt engineering, RAG systems

- Reinforcement Learning—Q-learning, policy gradients, applications

- AutoML and Neural Architecture Search—Automated machine learning techniques

- Explainable AI (XAI)—Model interpretability, SHAP, LIME

- Production ML Systems—Scalable inference, A/B testing, monitoring

- ML Platform Engineering—Building internal ML platforms

- Advanced Optimization—Bayesian optimization, multi-objective optimization

- Causal Machine Learning—Causal inference, treatment effects

- Federated Learning—Privacy-preserving machine learning

- Research Methodology—Paper reading, experiment design, publication

Key Tools Covered: Advanced deep learning frameworks, Kubernetes, advanced MLOps tools, Ray, Kubeflow

Deliverables:

- Original research project or paper

- Production ML system architecture

- Open-source contribution or library

For CTOs/CXOs: Data Science Strategy & AI Leadership

Course Duration: 6 weeks | Learning Hours: 30 hours

Learning Objectives:

- Develop organizational AI and data science strategy

- Build and scale data science teams

- Evaluate AI technology investments

- Navigate AI ethics and governance

- Drive AI-powered business transformation

Prerequisites: C-level or VP-level role, business strategy background

Course Outline:

- AI Business Strategy—Competitive advantage through AI, use case identification

- Building Data Science Teams—Hiring, organizational structures, career paths

- AI Technology Landscape—Platforms, tools, vendor ecosystem

- AI Investment Framework—ROI calculation, resource allocation

- AI Governance and Ethics—Responsible AI, bias mitigation, regulatory compliance

- Data Science Operations (MLOps)—Production ML at scale

- AI Maturity Models—Assessing organizational readiness

- Change Management for AI—Cultural transformation, adoption strategies

- AI Product Strategy—Building AI-powered products

- Future of AI—GenAI, agentic AI, emerging trends

Format: Executive workshops, case studies, industry expert sessions

Deliverables:

- Organizational AI strategy roadmap

- Data science team structure proposal

- Board-level AI investment proposal

4. OLAP (Online Analytical Processing)

For Beginners: Introduction to OLAP

Course Duration: 6 weeks | Learning Hours: 35 hours

Learning Objectives:

- Understand OLAP concepts and multidimensional analysis

- Learn OLAP vs. OLTP differences

- Master OLAP operations (slice, dice, drill-down, roll-up)

- Work with OLAP cubes

- Build basic multidimensional reports

Prerequisites: Basic SQL knowledge, understanding of relational databases

Course Outline:

- Introduction to OLAP—What is OLAP, benefits, applications

- OLAP vs. OLTP—Key differences, when to use each

- Multidimensional Data Model—Dimensions, hierarchies, measures

- OLAP Operations—Slice, dice, drill-down, drill-up, pivot, roll-up

- OLAP Cube Concepts—Understanding cubes, cells, aggregations

- Types of OLAP Systems—MOLAP, ROLAP, HOLAP

- OLAP in Data Warehousing—Integration with data warehouses

- Basic MDX Queries—Introduction to Multidimensional Expressions

- OLAP Design Principles—Dimensional modeling for OLAP

- OLAP Tools Overview—Introduction to popular OLAP platforms
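
You can preview the core OLAP operations without an OLAP server. The Python sketch below treats a small list of fact rows as a cube: roll-up and drill-down change the grouping dimensions, slice fixes one dimension, and dice restricts several. The sales figures and the `aggregate`, `slice_cube`, and `dice_cube` helpers are hypothetical. Real OLAP engines pre-aggregate and index far larger data.

```python
from collections import defaultdict

# Hypothetical sales facts: (year, quarter, region, product, sales)
FIELDS = ("year", "quarter", "region", "product")
FACTS = [
    (2024, "Q1", "EU", "Laptop", 100),
    (2024, "Q1", "US", "Laptop", 150),
    (2024, "Q2", "EU", "Phone", 80),
    (2024, "Q2", "US", "Phone", 120),
    (2025, "Q1", "EU", "Laptop", 110),
    (2025, "Q1", "APAC", "Phone", 90),
]


def aggregate(rows, by):
    """Sum the sales measure grouped by the named dimensions.
    Fewer dimensions is a roll-up; more dimensions is a drill-down."""
    positions = [FIELDS.index(name) for name in by]
    totals = defaultdict(int)
    for row in rows:
        totals[tuple(row[p] for p in positions)] += row[-1]
    return dict(totals)


def slice_cube(rows, dimension, value):
    """Slice: fix a single dimension to one value."""
    pos = FIELDS.index(dimension)
    return [row for row in rows if row[pos] == value]


def dice_cube(rows, **allowed):
    """Dice: keep rows whose dimensions fall inside the given value sets."""
    checks = {FIELDS.index(dim): set(values) for dim, values in allowed.items()}
    return [row for row in rows if all(row[p] in vals for p, vals in checks.items())]


if __name__ == "__main__":
    print(aggregate(FACTS, ["year"]))                 # roll-up to year
    print(aggregate(FACTS, ["year", "quarter"]))      # drill down to quarter
    print(aggregate(slice_cube(FACTS, "region", "EU"), ["year"]))
    print(aggregate(dice_cube(FACTS, region=["EU", "US"], product=["Laptop"]), ["region"]))
```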

Key Tools Covered: Microsoft Excel (Power Pivot), basic OLAP browsers

Deliverables:

- OLAP cube design for a business scenario

- Multidimensional analysis report

- Basic cube implementation

For Intermediates: Advanced OLAP Design

Course Duration: 8 weeks | Learning Hours: 50 hours

Learning Objectives:

- Design high-performance OLAP cubes

- Implement complex dimensional hierarchies

- Master MDX query language

- Optimize OLAP cube performance

- Build production OLAP solutions

Prerequisites: Completion of beginner course, advanced SQL, data warehousing knowledge

Course Outline:

- OLAP Cube Design Best Practices—Granularity, aggregation strategy

- Complex Hierarchies—Parent-child, ragged, unbalanced hierarchies

- Advanced Dimensions—Many-to-many relationships, role-playing dimensions

- Calculated Members and Measures—Business logic implementation

- Advanced MDX—Complex calculations, time intelligence

- OLAP Cube Partitioning—Improving query performance

- Aggregation Design—Pre-aggregation strategies

- OLAP Security—Dimension security, cell-level security

- OLAP Processing—Full vs. incremental processing

- Performance Tuning—Query optimization, caching strategies

Key Tools Covered: Microsoft SQL Server Analysis Services, Oracle Essbase, IBM Cognos TM1

Deliverables:

- Production-ready OLAP cube implementation

- Performance optimization documentation

- MDX query library

For Experts: Enterprise OLAP Architecture

Course Duration: 10 weeks | Learning Hours: 60 hours

Learning Objectives:

- Architect enterprise-scale OLAP systems

- Design hybrid OLAP solutions

- Implement real-time OLAP

- Master advanced optimization techniques

- Lead OLAP modernization projects

Prerequisites: 2+ years of OLAP experience, architecture expertise

Course Outline:

- Enterprise OLAP Architecture—Scalability, high availability

- Real-Time OLAP—In-memory OLAP, streaming integration

- Hybrid OLAP Designs—Combining MOLAP and ROLAP

- Massive-Scale Cube Design—Handling billions of rows

- Advanced Aggregation Algorithms—Custom aggregation functions

- OLAP on Modern Platforms—Cloud OLAP, ClickHouse for OLAP

- Write-Back and What-If Analysis—Interactive planning applications

- OLAP Performance Engineering—Advanced tuning techniques

- OLAP Integration—APIs, embedding OLAP in applications

- Migration Strategies—Legacy OLAP modernization

Key Tools Covered: Advanced OLAP platforms, ClickHouse, cloud OLAP services

Deliverables:

- Enterprise OLAP reference architecture

- Real-time OLAP implementation

- Migration playbook

For CTOs/CXOs: OLAP Strategy & Business Value

Course Duration: 3 weeks | Learning Hours: 15 hours

Learning Objectives:

- Understand the strategic value of OLAP for business intelligence

- Evaluate OLAP technology options

- Calculate OLAP investment ROI

- Make architectural decisions

- Align OLAP with business goals

Prerequisites: Executive leadership role

Course Outline:

- OLAP Business Value—Faster decision-making, business agility

- OLAP Technology Landscape—Platform comparison, selection criteria

- Cloud vs. On-Premises OLAP—Cost-benefit analysis

- OLAP Investment Framework—TCO, ROI calculation

- Self-Service BI with OLAP—Empowering business users

- OLAP Success Metrics—Measuring adoption and value

Format: Executive briefings, vendor demonstrations

Deliverables:

- OLAP technology selection framework

- Business case presentation

5. SQL for Data Analytics

For Beginners: SQL Fundamentals for Analytics

Course Duration: 8 weeks | Learning Hours: 45 hours

Learning Objectives:

- Master fundamental SQL syntax and commands

- Write queries to analyze data

- Join multiple tables for comprehensive analysis

- Aggregate and group data effectively

- Create reports using SQL

Prerequisites: Basic computer skills, logical thinking

Course Outline:

- Introduction to SQL—What is SQL, relational databases, SQL flavors

- Basic SELECT Statements—Retrieving data, column selection

- Filtering Data with WHERE—Conditions, operators, logical expressions

- Sorting and Limiting Results—ORDER BY, LIMIT, TOP

- Aggregate Functions—COUNT, SUM, AVG, MIN, MAX

- GROUP BY and HAVING—Grouping data, filtering groups

- Introduction to JOINs—INNER JOIN, LEFT JOIN, RIGHT JOIN

- Working with Dates—Date functions, time-based analysis

- Subqueries—Nested queries, correlated subqueries

- Data Transformation—CASE statements, string functions

Key Tools Covered: PostgreSQL, MySQL, SQL Server

Deliverables:

- 10 SQL query challenges completed

- Analytics report generated from database

- SQL query portfolio

For Intermediates: Advanced SQL for Analytics

Course Duration: 10 weeks | Learning Hours: 60 hours

Learning Objectives:

- Master advanced SQL techniques for complex analysis

- Implement window functions for analytical queries

- Write CTEs (Common Table Expressions) for readable queries

- Optimize query performance

- Build data transformation pipelines using SQL

Prerequisites: Strong SQL foundation, completion of beginner course

Course Outline:

- Advanced Joins—Self-joins, cross joins, multiple table joins

- Window Functions—ROW_NUMBER, RANK, DENSE_RANK, NTILE

- Analytical Window Functions—LEAD, LAG, FIRST_VALUE, LAST_VALUE

- Common Table Expressions (CTEs)—WITH clause, recursive CTEs

- Advanced Aggregations—ROLLUP, CUBE, GROUPING SETS

- Set Operations—UNION, INTERSECT, EXCEPT

- Pivoting and Unpivoting Data—Dynamic pivots, cross-tabulation

- Text Analysis in SQL—String functions, pattern matching, regex

- Complex Calculations—Mathematical operations, running totals, moving averages

- Query Optimization Basics—Understanding execution plans
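
You can try the window functions listed above with Python's built-in `sqlite3` module. This sketch assumes a hypothetical `sales` table and an SQLite library of version 3.25 or later, the first release with window function support. The syntax for these particular functions is essentially the same on PostgreSQL, SQL Server, and Snowflake.

```python
import sqlite3

# Window functions require SQLite 3.25+, which recent Python builds bundle.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, month INTEGER, amount INTEGER)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?, ?)",
    [("EU", 1, 100), ("EU", 2, 130), ("EU", 3, 90),
     ("US", 1, 200), ("US", 2, 180), ("US", 3, 220)],
)

QUERY = """
SELECT region,
       month,
       amount,
       SUM(amount) OVER (PARTITION BY region ORDER BY month)       AS running_total,
       LAG(amount) OVER (PARTITION BY region ORDER BY month)       AS prev_amount,
       RANK()      OVER (PARTITION BY region ORDER BY amount DESC) AS rank_in_region
FROM sales
ORDER BY region, month
"""

rows = conn.execute(QUERY).fetchall()
for row in rows:
    print(row)
```

With `ORDER BY`, the default window frame runs from the start of the partition to the current row, so `SUM(...) OVER` yields a running total per region. `LAG` returns the previous month's amount, and NULL (Python `None`) for the first month.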

Key Tools Covered: Advanced SQL on PostgreSQL, SQL Server, Snowflake

Deliverables:

- Advanced analytics project using complex SQL

- Query optimization case study

- Reusable SQL function library

For Experts: SQL Performance Optimization

Course Duration: 8 weeks | Learning Hours: 50 hours

Learning Objectives:

- Analyze and optimize slow SQL queries

- Design efficient database schemas for analytics

- Master indexing strategies

- Implement advanced performance tuning

- Scale SQL for big data workloads

Prerequisites: 2+ years of SQL experience, database administration knowledge

Course Outline:

- Query Execution Plans—Reading and analyzing EXPLAIN plans

- Advanced Indexing—B-tree, bitmap, partial indexes

- Query Rewriting—Optimization techniques, anti-patterns

- Partitioning Strategies—Table partitioning for performance

- Materialized Views—Pre-computing results for speed

- Statistics and Query Optimizer—Database statistics, optimizer hints

- Parallel Query Execution—Leveraging parallelism

- Connection Pooling and Resource Management—Scalability techniques

- SQL on Distributed Systems—Sharding, distributed queries

- Monitoring and Troubleshooting—Performance monitoring tools

Key Tools Covered: Query analyzers, database profilers, monitoring tools

Deliverables:

- Query optimization portfolio

- Performance tuning playbook

- Database schema optimization recommendations

For CTOs/CXOs: SQL Strategy for Analytics

Course Duration: 2 weeks | Learning Hours: 10 hours

Learning Objectives:

- Understand SQL’s role in modern analytics

- Evaluate SQL vs. NoSQL for analytics workloads

- Make technology decisions for analytics infrastructure

- Understand cost implications of query performance

Prerequisites: Executive leadership role

Course Outline:

- SQL in Modern Data Stack—SQL’s evolving role

- SQL Technology Landscape—PostgreSQL, MySQL, cloud SQL services

- SQL vs. NoSQL for Analytics—When to use each

- Cost of Poor Query Performance—Business impact

- Building SQL Expertise in Teams—Training and development

Format: Executive overviews, technical briefings

Deliverables:

- Technology selection criteria

- Team development plan

6. SQL Performance Optimization

For Intermediates: SQL Query Tuning

Course Duration: 6 weeks | Learning Hours: 40 hours

Learning Objectives:

- Identify and fix slow queries

- Implement effective indexing strategies

- Optimize JOIN operations

- Reduce query complexity

- Monitor query performance

Prerequisites: Strong SQL knowledge, understanding of database concepts

Course Outline:

- Performance Fundamentals—Understanding query cost

- Indexing Best Practices—When and how to create indexes

- Query Execution Plans—Reading EXPLAIN output

- Optimizing SELECT Statements—Column selection, avoiding SELECT *

- JOIN Optimization—JOIN order, JOIN types

- Subquery Optimization—Converting to JOINs, EXISTS vs. IN

- Aggregate Query Optimization—Efficient GROUP BY

- Avoiding Common Pitfalls—Anti-patterns, bad practices

- Database Configuration—Memory, cache settings

- Performance Monitoring—Tools and techniques
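
You can practice reading execution plans and judging when to index on a laptop. The sketch below uses SQLite's `EXPLAIN QUERY PLAN` through Python's `sqlite3` module to compare the plan for the same filter before and after an index is added. The `orders` table and the `idx_orders_customer` index name are hypothetical. The exact plan wording varies between SQLite versions, and PostgreSQL or MySQL `EXPLAIN` output looks quite different.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL)")
conn.executemany(
    "INSERT INTO orders (customer_id, amount) VALUES (?, ?)",
    [(i % 1000, i * 0.5) for i in range(10_000)],
)

QUERY = "SELECT amount FROM orders WHERE customer_id = ?"


def plan(sql, params):
    """Return the human-readable 'detail' column of EXPLAIN QUERY PLAN."""
    return [row[-1] for row in conn.execute("EXPLAIN QUERY PLAN " + sql, params)]


before = plan(QUERY, (42,))   # no index yet: full table scan expected
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
after = plan(QUERY, (42,))    # index on the filter column: index search expected

print("before:", before)
print("after: ", after)
```

Before the index exists, SQLite reports a `SCAN` of the whole table. Afterwards, it reports a `SEARCH` that uses `idx_orders_customer`.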

Key Tools Covered: EXPLAIN, database profilers, monitoring tools

Deliverables:

- Before/after optimization case studies

- Query optimization checklist

- Performance monitoring dashboard

For Experts: Advanced SQL Performance Engineering

Course Duration: 8 weeks | Learning Hours: 55 hours

Learning Objectives:

- Master advanced optimization techniques

- Design queries for massive scale

- Implement sophisticated caching strategies

- Tune database systems for analytics workloads

- Troubleshoot complex performance issues

Prerequisites: 3+ years of database performance experience

Course Outline:

- Advanced Execution Plan Analysis—Deep dive into optimizer internals

- Advanced Indexing—Covering indexes, filtered indexes, expression indexes

- Partitioning and Sharding—Horizontal and vertical partitioning

- Query Hints and Optimizer Control—Forcing execution plans

- Materialized Views and Summary Tables—Strategic pre-aggregation

- Columnar Storage Optimization—ClickHouse, Redshift techniques

- Parallel Query Processing—Multi-core optimization

- Memory Management—Buffer pools, cache tuning

- I/O Optimization—Reducing disk reads, SSD optimization

- Advanced Monitoring and Diagnostics—System catalog queries, wait statistics

Key Tools Covered: Advanced profiling tools, SQL Server dynamic management views (DMVs), MySQL Performance Schema

Deliverables:

- Enterprise-scale optimization project

- Performance engineering playbook

- Custom monitoring solution

For CTOs/CXOs: SQL Performance Business Impact

Course Duration: 1 week | Learning Hours: 5 hours

Learning Objectives:

- Understand the business impact of query performance

- Evaluate infrastructure investments for performance gains

- Set performance SLAs

- Build high-performance database teams

Prerequisites: Executive leadership role

Course Outline:

- Cost of Slow Queries—Revenue impact, user experience

- Performance Infrastructure Investment—Hardware, cloud services

- Performance SLAs—Setting and measuring targets

- Team Capability Building—Developing performance expertise

Format: Executive briefing

Deliverables:

- Performance investment framework

- SLA definition

7. Statistics for Data Analytics

For Beginners: Statistical Foundations

Course Duration: 10 weeks | Learning Hours: 55 hours

Learning Objectives:

- Understand core statistical concepts

- Perform descriptive statistical analysis

- Learn probability theory basics

- Conduct hypothesis testing

- Apply statistics to business problems

Prerequisites: High school mathematics

Course Outline:

- Introduction to Statistics—Types of statistics, applications in data analytics

- Data Types and Measurement—Nominal, ordinal, interval, ratio

- Descriptive Statistics—Mean, median, mode, range

- Measures of Dispersion—Variance, standard deviation, IQR

- Data Visualization—Histograms, box plots, scatter plots

- Probability Fundamentals—Probability rules, conditional probability

- Probability Distributions—Normal, binomial, Poisson distributions

- Sampling and Sampling Distributions—Central Limit Theorem

- Confidence Intervals—Estimation, margin of error

- Hypothesis Testing—Null hypothesis, p-values, Type I/II errors

Key Tools Covered: Excel, Python (SciPy, StatsModels)

Deliverables:

- Statistical analysis reports

- Business insights from statistical tests

- Visualization portfolio

For Intermediates: Applied Statistics

Course Duration: 12 weeks | Learning Hours: 65 hours

Learning Objectives:

- Master regression analysis

- Conduct A/B testing and experimentation

- Perform multivariate analysis

- Apply statistical modeling

- Interpret statistical results for business

Prerequisites: Beginner course completion, basic programming

Course Outline:

- Correlation and Causation—Understanding relationships

- Simple Linear Regression—Model fitting, interpretation

- Multiple Regression—Multiple predictors, multicollinearity

- Logistic Regression—Binary outcomes, odds ratios

- ANOVA—Comparing multiple groups

- Chi-Square Tests—Categorical data analysis

- Time Series Analysis—Trends, seasonality, forecasting

- A/B Testing—Experimental design, statistical significance

- Statistical Power and Sample Size—Planning experiments

- Multivariate Analysis—Factor analysis, principal components
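
To make the A/B testing topic concrete, here is a minimal sketch of a two-sided, two-proportion z-test with a pooled standard error, written with the standard library only. The conversion counts are hypothetical, and the function name is illustrative rather than any library's API; in practice StatsModels offers an equivalent test.

```python
import math
from statistics import NormalDist

def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for a difference in conversion rates (pooled standard error)."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)  # common rate assumed under H0: p_a == p_b
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))  # two-sided p-value
    return z, p_value

# Hypothetical experiment: 200/2000 conversions in control, 250/2000 in the variant.
z, p = two_proportion_z_test(200, 2000, 250, 2000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

Here the variant's lift is statistically significant at the 5% level. Whether a 2.5-point lift matters to the business is a separate question, which is where power analysis and sample-size planning come in.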

Key Tools Covered: R, Python (Pandas, StatsModels, SciPy)

Deliverables:

- A/B test analysis report

- Regression modeling project

- Business forecasting model

For Experts: Advanced Statistical Methods

Course Duration: 14 weeks | Learning Hours: 75 hours

Learning Objectives:

- Master advanced statistical techniques

- Implement Bayesian statistics

- Conduct causal inference analysis

- Build sophisticated statistical models

- Apply cutting-edge statistical methods

Prerequisites: Strong statistics foundation, 2+ years analytics experience

Course Outline:

- Generalized Linear Models (GLMs)—Poisson, negative binomial regression

- Mixed Effects Models—Hierarchical models, random effects

- Survival Analysis—Kaplan-Meier, Cox proportional hazards

- Bayesian Statistics—Bayesian inference, MCMC methods

- Causal Inference—Propensity score matching, instrumental variables

- Time Series Econometrics—ARIMA, GARCH models

- Multivariate Time Series—VAR models, cointegration

- Non-Parametric Methods—Kernel density estimation, bootstrap

- Experimental Design—Factorial designs, response surface methodology

- Statistical Machine Learning—Regularization, cross-validation
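
The non-parametric bootstrap from the outline can be sketched in a few lines. The function below computes a percentile bootstrap confidence interval for any statistic by resampling with replacement. The sample data, the resample count, and the fixed seed are assumptions chosen for reproducibility, not recommendations.

```python
import random
import statistics

def bootstrap_ci(sample, stat=statistics.mean, n_resamples=2000, alpha=0.05, seed=0):
    """Percentile bootstrap confidence interval for an arbitrary statistic."""
    rng = random.Random(seed)  # fixed seed so results are reproducible
    estimates = sorted(
        stat(rng.choices(sample, k=len(sample)))  # resample with replacement
        for _ in range(n_resamples)
    )
    lo_idx = round((alpha / 2) * n_resamples)
    hi_idx = round((1 - alpha / 2) * n_resamples) - 1
    return estimates[lo_idx], estimates[hi_idx]

# Hypothetical skewed sample (e.g. session durations in minutes).
durations = [1.2, 0.8, 3.5, 2.2, 15.0, 0.5, 4.1, 2.9, 1.7, 7.3, 0.9, 2.4]
print(bootstrap_ci(durations, stat=statistics.median))
```

Because the statistic is passed in as a function, the same routine works for medians, trimmed means, or ratios that lack a convenient closed-form standard error.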

Key Tools Covered: R (advanced packages), Python (PyMC3, Stan), BUGS/JAGS

Deliverables:

- Advanced analytics research project

- Causal analysis case study

- Methodological white paper

For CTOs/CXOs: Statistics for Decision Making

Course Duration: 4 weeks | Learning Hours: 20 hours

Learning Objectives:

- Interpret statistical results correctly

- Avoid common statistical fallacies

- Make data-driven decisions with confidence

- Evaluate statistical claims

- Set up rigorous testing frameworks

Prerequisites: Executive leadership role

Course Outline:

- Statistics in Business Context—Why statistics matters

- Understanding Statistical Significance—Avoiding misinterpretation

- Statistical vs. Practical Significance—Business relevance

- Common Statistical Fallacies—Correlation vs. causation, p-hacking

- Building Testing Culture—A/B testing at scale

- Evaluating Data Science Teams—Statistical rigor
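
One reason p-hacking is dangerous is simple arithmetic. If a team runs many independent tests where no real effect exists, the chance that at least one comes out "significant" grows quickly. The short sketch below computes this family-wise error rate under the assumption that the tests are independent and each uses the same significance level.

```python
def family_wise_error_rate(num_tests, alpha=0.05):
    """Chance of at least one false positive across independent tests when every null is true."""
    return 1 - (1 - alpha) ** num_tests

for k in (1, 5, 20):
    print(k, round(family_wise_error_rate(k), 3))
```

With 20 independent tests at the 5% level, the chance of at least one false positive is about 64%. Corrections such as Bonferroni (testing each at alpha / k) keep that rate in check.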

Format: Executive seminars with case studies

Deliverables:

- Decision-making framework

- Testing culture guidelines

8. Advanced Statistics for Data Scientists

For Experts: Advanced Statistical Theory

Course Duration: 16 weeks | Learning Hours: 85 hours

Learning Objectives:

- Master advanced statistical theory

- Implement state-of-the-art statistical methods

- Conduct rigorous statistical research

- Apply advanced probability theory

- Develop custom statistical models

Prerequisites: Graduate-level statistics, strong mathematical background

Course Outline:

- Advanced Probability Theory—Measure theory, stochastic processes

- Asymptotic Statistics—Consistency, efficiency, asymptotic distributions

- Maximum Likelihood Theory—Properties of MLE, information theory

- Bayesian Inference—Conjugate priors, Bayesian computation

- Statistical Decision Theory—Loss functions, minimax, Bayes estimators

- High-Dimensional Statistics—Sparse models, regularization theory

- Semi-Parametric Methods—Partial likelihood, empirical processes

- Survival Analysis Theory—Counting processes, martingales

- Spatial Statistics—Geostatistics, spatial point processes

- Computational Statistics—Monte Carlo methods, EM algorithm

- Statistical Learning Theory—VC dimension, PAC learning

- Resampling Methods—Bootstrap theory, permutation tests
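
As an illustration of the Monte Carlo methods covered under Computational Statistics, the sketch below estimates a definite integral by uniform sampling and reports its standard error, which shrinks roughly as one over the square root of the number of samples. The integrand, sample size, and seed are arbitrary choices for the example.

```python
import math
import random
import statistics

def monte_carlo_integral(f, a, b, n=20_000, seed=42):
    """Estimate the integral of f over [a, b] by uniform sampling, with its standard error."""
    rng = random.Random(seed)
    values = [f(rng.uniform(a, b)) for _ in range(n)]
    width = b - a
    estimate = width * statistics.fmean(values)
    std_error = width * statistics.stdev(values) / math.sqrt(n)  # shrinks like 1/sqrt(n)
    return estimate, std_error

# The exact value of the integral of exp(-x^2) over [0, 1] is about 0.746824.
est, se = monte_carlo_integral(lambda x: math.exp(-x * x), 0.0, 1.0)
print(f"{est:.4f} +/- {se:.4f}")
```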

Key Tools Covered: R (advanced statistical packages), Python, Julia, Stan

Deliverables:

- Research paper or thesis

- Novel statistical methodology

- Open-source statistical package

9. ClickHouse

For Beginners: Introduction to ClickHouse

Course Duration: 6 weeks | Learning Hours: 35 hours

Learning Objectives:

- Understand ClickHouse architecture and use cases

- Install and configure ClickHouse

- Write basic ClickHouse SQL queries

- Load data into ClickHouse

- Create tables and understand table engines

Prerequisites: Basic SQL knowledge, Linux familiarity

Course Outline:

- Introduction to ClickHouse—What is ClickHouse, OLAP databases, use cases

- ClickHouse Architecture Overview—Columnar storage, why ClickHouse is fast

- Installation and Setup—Installation methods, server configuration

- ClickHouse SQL Basics—SELECT queries, data types

- Table Engines Introduction—MergeTree family, Log engines

- Data Ingestion—INSERT statements, bulk loading, CSV imports

- Basic Query Operations—Filtering, aggregations, GROUP BY

- Working with Functions—String, date, and mathematical functions

- Ordering and Primary Keys—ORDER BY clause, primary key concepts

- Basic Performance Concepts—Why queries are fast, columnar advantages

Key Tools Covered: ClickHouse client, DBeaver, Grafana

Deliverables:

- ClickHouse instance setup

- Data loading project

- Basic analytics queries

For Intermediates: ClickHouse Development

Course Duration: 8 weeks | Learning Hours: 50 hours

Learning Objectives:

- Master ClickHouse SQL dialect and functions

- Design optimal table schemas

- Build data pipelines to ClickHouse

- Work with materialized views

- Optimize query performance

Prerequisites: Completion of beginner course, strong SQL skills

Course Outline:

- Advanced Table Engines—ReplicatedMergeTree, CollapsingMergeTree, ReplacingMergeTree

- Schema Design Best Practices—Choosing ORDER BY keys, partitioning strategies

- Advanced SQL Features—Array functions, nested structures, WITH clauses

- Materialized Views—Creating and using materialized views for aggregations

- Data Pipelines—Kafka integration, real-time data ingestion

- JOINs in ClickHouse—JOIN types, optimization techniques

- Dictionaries—External dictionaries for data enrichment

- Data Compression—Codecs and compression algorithms

- Query Optimization Basics—Using EXPLAIN, identifying bottlenecks

- Monitoring and Observability—System tables, query logs
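
To show why codec choice matters, the sketch below mimics the idea behind chaining ClickHouse's Delta codec with a general-purpose compressor (as in a column declared with `CODEC(Delta, ZSTD)`). Slowly increasing values such as timestamps become small, repetitive differences that compress far better. This is a conceptual sketch only: zlib stands in for LZ4/ZSTD because it ships with Python's standard library, and the timestamps are synthetic.

```python
import struct
import zlib

def delta_encode(values):
    """Store the first value, then the differences between consecutive values."""
    return values[:1] + [b - a for a, b in zip(values, values[1:])]

def delta_decode(deltas):
    """Invert delta_encode with a running sum."""
    out, total = [], 0
    for d in deltas:
        total += d
        out.append(total)
    return out

def compressed_size(values):
    raw = struct.pack(f"<{len(values)}q", *values)  # 64-bit little-endian integers
    return len(zlib.compress(raw, 6))

# Hypothetical event timestamps (seconds), roughly ten seconds apart.
timestamps = [1_700_000_000 + i * 10 + (i % 3) for i in range(10_000)]
print("plain:", compressed_size(timestamps))
print("delta:", compressed_size(delta_encode(timestamps)))
```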

Key Tools Covered: Kafka, vector databases, Grafana, Prometheus

Deliverables:

- Production-ready schema design

- Real-time data pipeline

- Optimized materialized view architecture

For Experts: ClickHouse Advanced Topics

Course Duration: 10 weeks | Learning Hours: 65 hours

Learning Objectives:

- Master ClickHouse internals and architecture

- Build distributed ClickHouse clusters

- Perform advanced performance tuning

- Troubleshoot complex ClickHouse issues

- Design massive-scale ClickHouse architectures

Prerequisites: 1+ year ClickHouse experience, system administration skills

Course Outline:

- ClickHouse Internals Deep Dive—Storage layer, MergeTree implementation

- Distributed Architecture—Sharding, replication, ClickHouse Keeper

- Advanced Query Optimization—PREWHERE, projections, skip indexes

- Performance Engineering—Hardware optimization, memory tuning

- Write Performance Optimization—Batch inserts, async inserts, buffer tables

- Merge Process Optimization—Background merges, merge settings

- Advanced Materialized Views—Chained views, complex transformations

- Security and Access Control—Users, roles, row-level security

- Backup and Disaster Recovery—Backup strategies, point-in-time recovery

- Kubernetes Deployment—Running ClickHouse on Kubernetes, operators
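
A core idea behind distributed aggregation across shards is that each shard returns a partial aggregation state rather than a final answer, and the initiating node merges those states. The sketch below illustrates this for an average, where the state is a (sum, count) pair. It is conceptually similar to ClickHouse's `-State`/`-Merge` aggregate combinators (for example `avgState` and `avgMerge`), but it is a plain-Python illustration, not ClickHouse's implementation. The rows and shard split are hypothetical.

```python
from collections import defaultdict

def shard_partial_avg(rows):
    """Stage 1 (per shard): reduce rows to a [sum, count] state per key."""
    state = defaultdict(lambda: [0.0, 0])
    for key, value in rows:
        state[key][0] += value
        state[key][1] += 1
    return dict(state)

def merge_partials(partials):
    """Stage 2 (initiator): merge shard states, then finalize them into averages."""
    merged = defaultdict(lambda: [0.0, 0])
    for partial in partials:
        for key, (total, count) in partial.items():
            merged[key][0] += total
            merged[key][1] += count
    return {key: total / count for key, (total, count) in merged.items()}

# Hypothetical rows (country, latency_ms) spread across two shards.
shard_1 = [("US", 120.0), ("DE", 80.0), ("US", 100.0)]
shard_2 = [("US", 140.0), ("DE", 60.0)]
print(merge_partials([shard_partial_avg(shard_1), shard_partial_avg(shard_2)]))
```

Averaging the per-shard averages would give 125.0 for US instead of the correct 120.0. That is why the merge step works on (sum, count) states rather than on finished results.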

Key Tools Covered: ClickHouse Keeper, Kubernetes, advanced monitoring tools

Deliverables:

- Multi-region distributed cluster design

- Performance optimization case study

- Production operations playbook

For CTOs/CXOs: ClickHouse Business Value

Course Duration: 2 weeks | Learning Hours: 10 hours

Learning Objectives:

- Understand ClickHouse business value and ROI

- Evaluate ClickHouse for your organization

- Make informed build vs. buy vs. cloud decisions

- Understand total cost of ownership

- Plan your ClickHouse adoption strategy

Prerequisites: Executive leadership role

Course Outline:

- ClickHouse Business Case—Real-time analytics value, customer examples

- ClickHouse vs. Alternatives—Comparison with Snowflake, BigQuery, Redshift

- Deployment Options—Self-hosted vs. ClickHouse Cloud

- TCO Analysis—Cost modeling, infrastructure requirements

- Implementation Strategy—Migration planning, team requirements

- Success Metrics—Measuring ClickHouse adoption and value

Format: Executive briefings, customer case studies

Deliverables:

- Technology evaluation framework

- ROI analysis

- Implementation roadmap

10. Advanced ClickHouse Topics

For Experts: ClickHouse Specialized Applications

Course Duration: 12 weeks | Learning Hours: 75 hours

Learning Objectives:

- Build real-time analytics applications with ClickHouse

- Implement advanced data modeling patterns

- Design for massive scale (billions to trillions of rows)

- Master advanced features and capabilities

- Solve complex analytical problems

Prerequisites: Strong ClickHouse foundation, production experience

Course Outline:

- Real-Time Analytics Architecture—Streaming ingestion, low-latency queries

- Advanced Data Modeling—Time-series optimization, event data modeling

- Window Functions and Advanced SQL—Complex analytical queries

- Geospatial Analytics—Working with geographic data

- Machine Learning Integration—ML models in ClickHouse

- Advanced Aggregation Techniques—Custom aggregation functions

- Query Result Caching—Optimizing repetitive queries

- External Data Integration—S3, HDFS, table functions

- Advanced Security Patterns—Multi-tenancy, data isolation

- Cost Optimization—Resource management, tiered storage

- Observability and Monitoring—Production monitoring strategies

- ClickHouse at Scale—Managing petabyte-scale deployments

Key Tools Covered: Advanced ClickHouse features, monitoring platforms, integration tools

Deliverables:

- Real-time analytics application

- Advanced data model implementation

- Scalability architecture document

11. ClickHouse Performance Optimization and Tuning

For Experts: ClickHouse Performance Engineering

Course Duration: 8 weeks | Learning Hours: 55 hours

Learning Objectives:

- Master ClickHouse performance optimization techniques

- Tune ClickHouse for specific workloads

- Optimize hardware and system configuration

- Implement advanced indexing strategies

- Achieve sub-second query performance

Prerequisites: Strong ClickHouse experience, systems engineering background

Course Outline:

- Performance Fundamentals—Understanding ClickHouse performance characteristics

- Query Optimization Techniques—PREWHERE, column pruning, projection optimization

- Indexing Strategies—Primary keys, skip indexes, bloom filters

- Projections—Designing projections for query patterns

- Compression Optimization—Codec selection, compression ratios

- Memory Configuration—Memory limits, buffer settings

- CPU Optimization—Thread settings, parallelism

- Disk I/O Optimization—Storage configuration, RAID, NVMe

- Merge Tuning—Background merge optimization

- Network Optimization—Distributed query optimization

- Workload-Specific Tuning—Dashboards, ad-hoc queries, ETL

- Benchmarking and Testing—Performance testing methodologies
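
Bloom-filter skip indexes work because a Bloom filter never produces false negatives. If the filter for a block of rows says a value is absent, the whole block can be skipped. The minimal sketch below shows the data structure itself. The bit-array size, hash count, and SHA-256-based double hashing are assumptions for illustration and do not reflect how ClickHouse's `bloom_filter` index hashes values internally.

```python
import hashlib

class BloomFilter:
    """A minimal Bloom filter: no false negatives, tunable false-positive rate."""

    def __init__(self, num_bits=1024, num_hashes=3):
        self.num_bits = num_bits
        self.num_hashes = num_hashes
        self.bits = bytearray(num_bits // 8 + 1)

    def _positions(self, item):
        # Derive k bit positions from one SHA-256 digest (double hashing).
        digest = hashlib.sha256(str(item).encode()).digest()
        h1 = int.from_bytes(digest[:8], "little")
        h2 = int.from_bytes(digest[8:16], "little") | 1
        return [(h1 + i * h2) % self.num_bits for i in range(self.num_hashes)]

    def add(self, item):
        for pos in self._positions(item):
            self.bits[pos // 8] |= 1 << (pos % 8)

    def might_contain(self, item):
        return all(self.bits[pos // 8] & (1 << (pos % 8)) for pos in self._positions(item))

# One filter per block of rows: a query can skip any block whose filter says "definitely not here".
block_filter = BloomFilter()
for user_id in ["u1", "u7", "u42"]:
    block_filter.add(user_id)
print(block_filter.might_contain("u42"))   # True: added items are always found
print(block_filter.might_contain("u999"))  # usually False; True would be a false positive
```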

Key Tools Covered: EXPLAIN, system tables, profiling tools, benchmarking frameworks

Deliverables:

- Performance optimization playbook

- Tuning parameters reference guide

- Before/after optimization case studies

12. ClickHouse Performance Troubleshooting

For Experts: ClickHouse Issue Resolution

Course Duration: 6 weeks | Learning Hours: 40 hours

Learning Objectives:

- Diagnose ClickHouse performance issues

- Troubleshoot slow queries

- Resolve cluster problems

- Fix data ingestion issues

- Implement preventive measures

Prerequisites: Production ClickHouse experience, troubleshooting skills

Course Outline:

- Troubleshooting Methodology—Systematic problem diagnosis

- Query Performance Issues—Identifying and fixing slow queries

- Memory Problems—OOM errors, memory leak diagnosis

- Disk Space Issues—Managing disk usage, partition problems

- Replication Troubleshooting—Replication lag, stuck replicas

- “Too Many Parts” Error—Causes and solutions

- Merge Problems—Stuck merges, merge performance

- Network Issues—Distributed query problems

- Data Consistency Issues—Detecting and fixing inconsistencies

- Using System Tables for Diagnosis—system.query_log, system.errors

- ClickHouse Logs Analysis—Reading and interpreting logs

- Crash Loop Debugging—Kubernetes-specific issues
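
A typical first diagnostic query for the "Too Many Parts" error counts active parts per partition in `system.parts`. The sketch below sends that query over ClickHouse's HTTP interface (assumed here to be at `http://localhost:8123/` with no authentication) and flags partitions above a threshold. The threshold of 100 and the function names are hypothetical. The limits ClickHouse actually enforces come from MergeTree settings such as `parts_to_delay_insert` and `parts_to_throw_insert`, whose defaults vary by version.

```python
import urllib.parse
import urllib.request

# Active parts per partition; a partition whose part count keeps growing is a
# common precursor to the "Too many parts" error.
PARTS_QUERY = """
SELECT database, table, partition, count() AS active_parts
FROM system.parts
WHERE active
GROUP BY database, table, partition
ORDER BY active_parts DESC
LIMIT 20
FORMAT TabSeparated
"""

def fetch_parts_report(base_url="http://localhost:8123/"):
    """Run PARTS_QUERY over the HTTP interface (assumes no authentication is required)."""
    url = base_url + "?" + urllib.parse.urlencode({"query": PARTS_QUERY})
    with urllib.request.urlopen(url, timeout=10) as resp:
        return resp.read().decode()

def flag_partitions(tsv_text, threshold=100):
    """Return (database, table, partition, parts) rows at or above a hypothetical threshold."""
    flagged = []
    for line in tsv_text.strip().splitlines():
        database, table, partition, parts = line.split("\t")
        if int(parts) >= threshold:
            flagged.append((database, table, partition, int(parts)))
    return flagged

# Offline example with canned output; against a live server use flag_partitions(fetch_parts_report()).
sample = "default\tevents\t202401\t240\ndefault\tevents\t202312\t12\n"
print(flag_partitions(sample))
```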

Key Tools Covered: System tables, log analyzers, diagnostic queries

Deliverables:

- Troubleshooting playbook

- Diagnostic query library

- Runbook for common issues

13. ClickHouse Internals

For Experts: ClickHouse Architecture Deep Dive

Course Duration: 10 weeks | Learning Hours: 65 hours

Learning Objectives:

- Master ClickHouse internal architecture

- Understand storage layer implementation

- Learn query execution engine details

- Explore distributed system internals

- Contribute to ClickHouse development

Prerequisites: Strong systems programming background, C++ knowledge helpful

Course Outline:

- ClickHouse Architecture Layers—Query processing, storage, integration

- Columnar Storage Implementation—How columnar storage works

- MergeTree Engine Internals—LSM-tree architecture, parts, merges

- Vectorized Query Execution—SIMD, CPU optimization

- Query Pipeline and Operators—Query execution stages

- Block Processing—Understanding blocks and chunks

- Compression Algorithms—LZ4, ZSTD, Delta, Gorilla codecs

- Primary Index Implementation—Sparse index, granules

- Distributed Query Execution—Two-stage aggregation, shuffling

- Replication Protocol—ClickHouse Keeper, Raft consensus

- Memory Management—Allocators, memory pools

- Code Reading and Contributing—Navigating ClickHouse source code
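
The sparse primary index can be illustrated with a toy model. Rows are sorted by the primary key and grouped into granules, and the index stores only one mark per granule (its first key). A range query then binary-searches the marks to decide which granules to read. This is a simplified sketch, not ClickHouse's on-disk format. The granule size of 4 is purely illustrative; ClickHouse's default `index_granularity` is 8192 rows.

```python
import bisect

GRANULE_SIZE = 4  # illustrative only; ClickHouse's default index_granularity is 8192 rows

def build_sparse_index(sorted_keys, granule_size=GRANULE_SIZE):
    """Keep one mark per granule: the first key of each granule."""
    return [sorted_keys[i] for i in range(0, len(sorted_keys), granule_size)]

def granules_for_range(marks, lo, hi):
    """Return indexes of granules that may contain keys in [lo, hi]."""
    # Start one granule before the first mark >= lo: that earlier granule may
    # still hold keys >= lo (this also stays correct with duplicate keys).
    first = max(bisect.bisect_left(marks, lo) - 1, 0)
    # Any granule whose first key is <= hi may contain matches.
    last = bisect.bisect_right(marks, hi) - 1
    return list(range(first, last + 1))

keys = list(range(0, 40, 2))  # 20 sorted keys: 0, 2, ..., 38
marks = build_sparse_index(keys)  # [0, 8, 16, 24, 32]
print(granules_for_range(marks, 10, 20))  # [1, 2]: only 8 of 20 rows are read
```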

Key Tools Covered: ClickHouse source code, debugging tools, profilers

Deliverables:

- Technical architecture documentation

- Source code analysis project

- Contribution to ClickHouse (bug fix or feature)

14. ClickHouse Troubleshooting

For Intermediates: ClickHouse Operations

Course Duration: 6 weeks | Learning Hours: 35 hours

Learning Objectives:

- Perform routine ClickHouse administration

- Monitor ClickHouse health

- Handle common operational issues

- Implement backup and recovery

- Maintain cluster stability

Prerequisites: Basic ClickHouse knowledge, Linux administration

Course Outline:

- ClickHouse Monitoring Basics—Key metrics, monitoring setup

- System Tables for Operations—system.parts, system.merges, system.replicas

- Common Error Messages—Understanding and resolving

- Backup Strategies—Backup methods, testing restores

- Upgrade Procedures—Safe upgrade practices

- User Management—Creating users, managing permissions

- Cluster Health Checks—Monitoring replication, detecting issues

- Resource Management—Managing CPU, memory, disk usage

- Query Management—Killing queries, setting limits

- Operational Best Practices—Production checklist

Key Tools Covered: Grafana, Prometheus, system tables, diagnostic scripts

Deliverables:

- Monitoring dashboard

- Operations runbook

- Backup and recovery procedures

For Experts: Advanced ClickHouse Troubleshooting

Course Duration: 8 weeks | Learning Hours: 50 hours

Learning Objectives:

- Diagnose complex production issues

- Perform root cause analysis

- Resolve cluster-wide problems

- Optimize problematic workloads

- Implement preventive monitoring

Prerequisites: Production ClickHouse operations experience

Course Outline:

- Advanced Diagnostic Techniques—Stack traces, profiling

- Cluster-Wide Issues—Resolving distributed system problems

- Data Corruption Detection and Recovery—Identifying and fixing data issues

- Performance Regression Analysis—Identifying performance degradation

- Kubernetes Troubleshooting—Crash loops, networking issues

- Resource Exhaustion—Handling memory, CPU, disk exhaustion

- Query Optimization in Production—Fixing problematic queries live

- Replication Conflict Resolution—Dealing with replication issues

- Emergency Procedures—Disaster recovery, data loss scenarios

- Preventive Measures—Alerting, capacity planning

Key Tools Covered: Advanced diagnostic tools, profilers, tracing tools

Deliverables:

- Advanced troubleshooting playbook

- Root cause analysis templates

- Preventive monitoring framework

Course Delivery Methods

Instructor-Led Training: Live virtual sessions with expert instructors, real-time Q&A, collaborative learning

Self-Paced Learning: Video lectures, interactive labs, downloadable resources—learn at your own pace

Hands-On Labs: Cloud-based lab environments, real-world datasets, practical exercises

Capstone Projects: Industry-relevant projects, portfolio development, practical application

Certification: Industry-recognized certificates awarded upon completion to demonstrate your expertise

Support and Resources

- 24/7 Learning Support: Technical assistance, course materials, discussion forums

- Career Services: Resume reviews, interview preparation, job placement assistance

- Alumni Network: Connect with graduates, mentorship opportunities, ongoing learning community

- Continuous Updates: Courses updated regularly with latest technologies and best practices

Enrollment Information

Prerequisites Assessment: Free skills assessment to determine your appropriate starting level

Flexible Scheduling: Multiple batch starts per month, weekend and evening options available

Corporate Training: Customized programs for organizations, volume discounts available

Free Trial: Sample the first module of any course for free

Contact ChistaDATA University for program registration and enrollment:

Email: info@chistadata.com

Phone: (844) 395-5717

Start your journey to data mastery today with ChistaDATA University—Where Data Professionals Are Made!
