ClickHouse Complete Guide to LowCardinality: When and How to Use It

Introduction

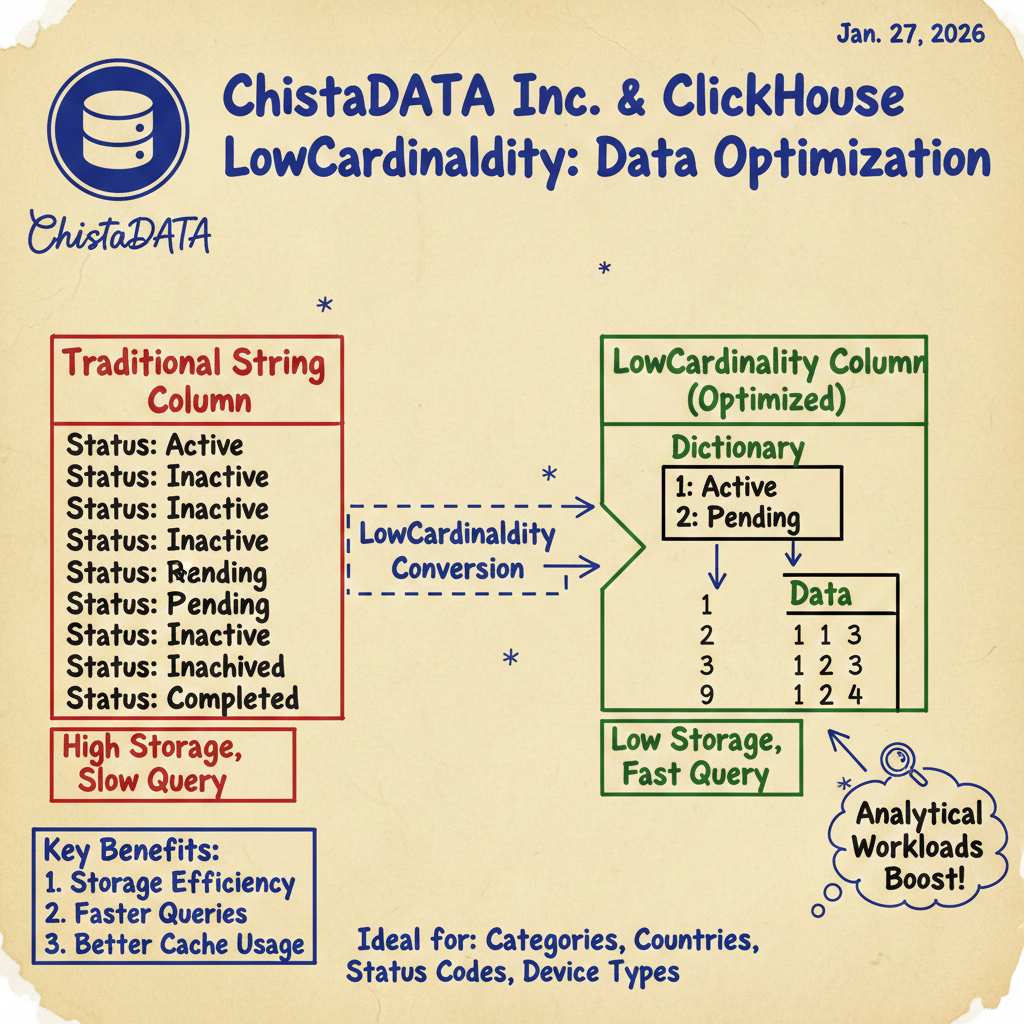

ClickHouse, the high-performance columnar database management system, offers a specialized data type called LowCardinality that serves as a powerful optimization tool for specific data patterns. This data type acts as a wrapper around string types (typically String) and implements dictionary encoding to significantly improve both storage efficiency and query performance for columns with limited unique values. Understanding when and how to leverage LowCardinality is essential for database administrators and developers seeking to optimize their ClickHouse implementations for analytical workloads.

The LowCardinality data type works by creating an internal dictionary that maps unique string values to integer positions. Instead of storing the full string repeatedly across millions of rows, ClickHouse stores only the integer position reference, dramatically reducing storage footprint and improving cache efficiency. This optimization is particularly effective for columns like status codes, categories, countries, device types, and other categorical data with limited variation. As we’ll explore in this comprehensive guide, the performance gains from proper LowCardinality usage can be substantial, making it a critical tool in the ClickHouse optimization toolkit.

How LowCardinality Works: Dictionary Encoding Explained

At its core, LowCardinality implements dictionary encoding, a compression technique that replaces repeated string values with smaller integer references. When a column is defined as LowCardinality(String), ClickHouse automatically creates and maintains an internal dictionary that maps each unique string value to a sequential integer ID. For example, a column containing country names like “United States”, “Canada”, “Mexico”, “United States”, “Canada” would be transformed so that “United States” maps to 1, “Canada” to 2, and “Mexico” to 3. The actual column data then stores these integer IDs instead of the full country names.

This approach provides multiple benefits. First, storage requirements are significantly reduced since integers require far less space than text strings, especially when the same values repeat frequently across rows. Second, operations like filtering, grouping, and joining become much faster because ClickHouse can work with compact integer values rather than performing expensive string comparisons. The optimization extends beyond simple storage savings—ClickHouse leverages the dictionary positions for various operations, including filtering and grouping, which accelerates query execution for many common analytical patterns.

The dictionary is stored separately from the main data parts and is loaded into memory when needed. ClickHouse manages this dictionary automatically, handling updates as new unique values are inserted. For optimal performance, the dictionary should ideally fit in memory, which is typically achievable for columns with reasonable cardinality. The efficiency of using LowCardinality data type depends heavily on data diversity, with the most significant benefits observed when working with columns containing fewer than 10,000 distinct values.

When to Use LowCardinality: Identifying Optimal Use Cases

Determining when to apply LowCardinality requires understanding both the technical thresholds and the characteristics of your data. The general rule of thumb is that LowCardinality is most effective for string columns with low to moderate cardinality—typically fewer than 10,000 distinct values 1. However, this threshold is not absolute and should be considered alongside other factors like value length and access patterns.

The most compelling use cases for LowCardinality involve string columns where the individual string values are relatively long compared to the number of unique values. For example, a country_name column containing full country names (“United States of America”, “United Kingdom”, “Russian Federation”) with only 200-300 unique values represents an ideal candidate. The storage savings from replacing these long strings with single-byte or two-byte integers can be dramatic. Similarly, columns containing descriptive categories, status messages, or product types with limited variation benefit significantly from this encoding.

Conversely, LowCardinality provides minimal benefit for columns with very high cardinality (approaching or exceeding 10,000 unique values) because the dictionary itself becomes large and the compression ratio decreases. It’s also less effective for very short strings (like single characters or two-letter codes) where the storage overhead of the original values is already minimal. Additionally, columns that are frequently updated may experience some overhead due to dictionary maintenance, though ClickHouse handles this efficiently in most cases.

Another important consideration is the column’s role in queries. Columns that are frequently used in WHERE clauses, GROUP BY operations, or as join keys are prime candidates for LowCardinality optimization because these operations benefit most from the faster integer comparisons. As noted in optimization best practices, ClickHouse’s primary index works most effectively when low-cardinality columns are positioned appropriately, allowing the database to skip large chunks of data efficiently during query execution.

Performance Benefits: Storage Efficiency and Query Acceleration

The adoption of LowCardinality data type delivers tangible benefits across multiple dimensions of database performance. The most immediately apparent advantage is storage efficiency. By replacing verbose string values with compact integer references, LowCardinality can reduce storage requirements by 50% or more, depending on the average string length and data distribution. This storage compression translates directly into cost savings, particularly for large-scale deployments where storage expenses represent a significant portion of the total cost of ownership.

Beyond storage savings, LowCardinality significantly accelerates query performance, particularly for SELECT operations. Operating with dictionary-encoded data increases the performance of SELECT queries for many applications because integer comparisons are inherently faster than string comparisons. This performance boost manifests in several ways: faster filtering operations in WHERE clauses, improved grouping efficiency in GROUP BY statements, and accelerated join operations when the LowCardinality column is used as a join key.

The performance gains extend to memory utilization as well. With smaller data representations, more rows can fit into CPU cache, reducing memory bandwidth requirements and improving overall system throughput. This cache efficiency is particularly valuable for analytical queries that scan large portions of data. Additionally, the compact representation allows for more effective use of vectorized query execution, where ClickHouse processes multiple values simultaneously using SIMD (Single Instruction, Multiple Data) instructions.

It’s worth noting that the performance benefits are not limited to read operations. While the primary advantages are realized during query execution, the reduced storage footprint also improves write performance indirectly by decreasing I/O requirements and allowing more efficient data compression at the part level. The overall effect is a more responsive database system capable of handling higher query volumes with the same hardware resources.

Implementation Guidelines and Best Practices

Implementing LowCardinality in your ClickHouse schema requires careful consideration of both existing data and future requirements. The data type can be applied during table creation or added to existing columns through ALTER TABLE statements, though the latter operation requires rewriting the data and should be planned accordingly. When defining a column as LowCardinality(String), the syntax is straightforward:

CREATE TABLE example_table (

id UInt64,

country_name LowCardinality(String),

status LowCardinality(String),

timestamp DateTime

) ENGINE = MergeTree()

ORDER BY (id);

For existing tables, you can modify a column to use LowCardinality:

ALTER TABLE example_table MODIFY COLUMN country_name LowCardinality(String);

When implementing LowCardinality, consider the following best practices:

- Evaluate cardinality before implementation: Use SELECT COUNT(DISTINCT column_name) to assess the number of unique values in your target column. As a general guideline, columns with fewer than 10,000 distinct values are strong candidates.

- Consider value length: The longer the average string length, the greater the potential storage savings. Short strings may not benefit significantly from the encoding overhead.

- Monitor dictionary size: While ClickHouse manages dictionaries automatically, extremely large dictionaries (approaching the 10,000 value threshold) may impact memory usage and should be monitored.

- Test with representative data: Before applying LowCardinality to production tables, test the performance impact with a representative dataset to quantify the actual benefits.

- Consider partitioning strategy: When designing partitioning keys, favor low cardinality columns as they create a manageable number of parts and improve query performance.

Common Use Cases and Practical Examples

LowCardinality finds application across numerous real-world scenarios where categorical data with limited variation is prevalent. One of the most common use cases is in web analytics platforms, where columns like browser_name, operating_system, device_type, and country typically have limited cardinality but contain relatively descriptive string values. For instance, a device_type column might contain values like “Mobile Phone”, “Tablet”, “Desktop Computer”, “Smart TV”, and “Wearable Device”—perfect candidates for dictionary encoding.

E-commerce applications benefit from LowCardinality in product categorization. Columns such as product_category, brand_name, color, and size often have constrained value sets that repeat across thousands or millions of product listings. Converting these to LowCardinality types reduces storage requirements and accelerates queries that filter or group by these attributes.

Log analysis systems represent another ideal use case. System logs frequently contain repetitive string values in fields like log_level (“INFO”, “WARNING”, “ERROR”, “DEBUG”), service_name, and event_type. These short but frequently repeated values are excellent candidates for LowCardinality optimization, improving both storage efficiency and the performance of diagnostic queries.

Time series applications also leverage LowCardinality for metadata columns. While the primary time series data might consist of numerical measurements, associated metadata like sensor_type, location_name, or measurement_unit typically has low cardinality. Optimizing these metadata columns allows for more efficient storage of the overall dataset and faster queries that filter time series by metadata attributes.

Limitations and Considerations

While LowCardinality offers significant benefits, it’s important to understand its limitations and potential drawbacks. The most significant constraint is the cardinality threshold—columns with very high numbers of unique values (typically exceeding 10,000) may not benefit from LowCardinality and could even experience performance degradation due to the overhead of maintaining a large dictionary 1.

Another consideration is the impact on certain string operations. Some string functions may execute slightly slower on LowCardinality columns because they require dictionary lookups to retrieve the original string values. However, for most analytical workloads where filtering, grouping, and aggregation are more common than complex string manipulation, this trade-off is favorable.

Memory usage is another factor to consider. While LowCardinality reduces overall storage requirements, it does require additional memory to maintain the dictionary in RAM for optimal performance. For tables with many LowCardinality columns or extremely large dictionaries, this memory overhead should be accounted for in system planning.

It’s also worth noting that LowCardinality is specifically designed for String types and cannot be applied to other data types. For categorical data stored as integers or other types, different optimization strategies may be more appropriate. Additionally, while ClickHouse handles dictionary updates efficiently, tables with extremely high update rates on LowCardinality columns might experience some overhead compared to regular string columns.

Integration with Other Optimization Techniques

LowCardinality works synergistically with other ClickHouse optimization strategies to deliver maximum performance. When combined with appropriate indexing, partitioning, and data ordering, the benefits of LowCardinality are amplified. For example, placing LowCardinality columns early in the ORDER BY clause of a MergeTree table can significantly improve the effectiveness of the primary index, allowing ClickHouse to skip large portions of data during query execution.

Partitioning strategies also benefit from LowCardinality columns. As recommended in optimization guides, choosing a low cardinality partitioning key helps maintain a manageable number of parts, which in turn improves query performance and reduces overhead associated with part management. When the partitioning key itself is a good candidate for LowCardinality encoding, applying both optimizations creates a powerful combination for large datasets.

Compression settings can also be optimized when using LowCardinality. The already compact integer representations of dictionary-encoded data respond well to ClickHouse’s various compression algorithms, potentially achieving even higher overall compression ratios. This layered approach to optimization—combining data type optimization with algorithmic compression—maximizes storage efficiency.

Conclusion

The LowCardinality data type represents a sophisticated optimization feature that addresses a common challenge in analytical database workloads: efficiently storing and querying categorical string data with limited variation. By implementing dictionary encoding transparently, ClickHouse delivers substantial improvements in both storage efficiency and query performance for appropriately selected columns.

The key to successful LowCardinality implementation lies in understanding your data characteristics and access patterns. Columns with fewer than 10,000 distinct values, particularly those containing relatively long string values, are prime candidates for this optimization 1. The resulting benefits—reduced storage costs, faster query execution, and improved memory utilization—make LowCardinality an essential tool for ClickHouse practitioners.

As with any database optimization, careful evaluation and testing are recommended before widespread deployment. By following the guidelines and best practices outlined in this comprehensive guide, you can effectively leverage LowCardinality to enhance your ClickHouse performance, delivering faster insights from your data while optimizing resource utilization. Whether you’re building a real-time analytics platform, a log processing system, or a large-scale data warehouse, understanding and applying LowCardinality appropriately can provide significant competitive advantages in terms of both performance and cost efficiency.

References

- LowCardinality(T) | ClickHouse Docs

- Lessons – performance optimization | ClickHouse Docs

- A Magical Mystery Tour of the LowCardinality Data Type – Altinity

- Reducing Clickhouse Storage Cost with the LowCardinality Type…

- Choose a Low Cardinality Partitioning Key

- LowCardinality (T) – ClickHouse Docs

Further Reading

- Setting up Alerts in ClickHouse for Performance Troubleshooting

- Troubleshooting Disk Space in ClickHouse

- Essential ClickHouse Metrics

- Boosting Materialized View Performance

- PREWHERE vs WHERE in ClickHouse Queries

You might also like:

- ClickHouse January 2023 Release – Version 23.1

- ClickHouse Security: Implementing Auditing and Log Capture

- Digital Transformation in Modern Banking with ClickHouse-powered Real-time Analytics

- Leveraging ClickHouse for Real-time Analytics on Blockchain Data

- Implementing Tiered Storage in ClickHouse: Leveraging S3 for Efficient Data Archival and Compliance